BI developers, BI managers and business managers struggle with getting consistent, high-quality reports on time and on budget. Report developers are challenged with managing the day-to-day development load and feel pressure from an ever-growing backlog of requests.

In this on-demand webinar, learn the workflow secrets of high-performing report development teams. We explore how they increase the pace of their delivery to meet high demand, look at the flow of report development work and discuss techniques for organizing and managing workload. You can also download our 77-point report development checklist mentioned in the webinar.

Learn how to

- Improve your report-building workflow

- Reduce mistakes, defects and bugs in your reports

- Improve your report delivery rate

- Reduce the backlog of report requests

- Better manage the development and deployment of analytics in your organization

Presenter

Greg Nash

MVP Data Platform | Principal Consultant

Dear Watson Consulting

Greg Nash is a Senturus partner in Australia. He is principal consultant at Dear Watson Consulting, a Microsoft Data Platform MVP and Power BI user group leader based in Melbourne, Australia. He has 20 years of experience in enterprise IT and, more recently, has specialized in Microsoft Power BI adoption, mentoring and development for organizations of all sizes.

Machine transcript

0:06

Greetings everyone and welcome to this latest instalment of the Senturus Knowledge Series. Today we will be discussing the secrets of high performing report development teams.

0:18

Before we get into the core of the presentation, a few housekeeping items.

0:22

While we mute everyone’s microphones out of consideration for our speaker, we encourage you to enter your questions in the questions area of the GoToWebinar control panel to make the session interactive.

0:35

We are generally able to respond to your questions while the webinar is in progress. But if we don’t, for some reason, we’ll cover them in the Q&A section at the end. So, make sure you stick around for that.

0:49

First question we always get early and often is, can I get the presentation? And the answer is an unqualified yes.

0:55

It is available on Senturus.com, under the Resources tab, in the Resources Library.

1:01

Alternatively, if you look in the chat window of the GoToWebinar control panel, you’ll find a link there, and you can go get it right now.

1:07

While you’re there on the website, be sure to bookmark our resource library, as it has tons of valuable content addressing a wide variety of business analytics topics.

1:17

Our agenda today: after a brief introduction of our speaker, we will discuss the Power BI development workflow:

1:28

Understanding your audience, looking at value streams, roles and responsibilities, and managing the flow.

1:34

After that, we’ll do a brief Senturus overview for those of you who may not be familiar with Senturus and what we offer.

1:39

We’ll cover some great additional free resources and get to the aforementioned Q&A, so again, make sure you stick around for that.

1:47

I’m pleased to be joined today by Greg Nash. Greg is our Senturus partner in Australia. He is a principal consultant at Dear Watson Consulting, a Microsoft Data Platform MVP

1:59

and a Power BI user group leader based in Melbourne, Australia, with 20 years of experience in enterprise IT.

2:06

More recently, he has specialized in Microsoft Power BI adoption, mentoring and development for organizations of all sizes.

2:15

My name is Michael Weinhauer. I’m a director here at Senturus, and among my numerous roles, I have the pleasure of emceeing our Knowledge Series presentations.

2:25

As usual, before we get into the presentation, we like to get a finger on the pulse of our audience. So, with that, I’m going to present a poll.

2:34

And the question today is, what is the number-one issue you face with your data platform right now?

2:42

Is it tools or training?

2:44

Is it data quality, or the legacy source system issues associated with that? Is it data engineering, getting the ETL or ELT processes in place? Is it user feedback or expectations from the organization?

2:58

Or is it data culture or support from the organization?

3:01

So please pick one of those.

3:04

Might take a minute to think about this one.

3:08

And we’ll give you just a minute or so to get your answers in. About half of you have given your responses.

3:17

Give it another 10 seconds here.

3:33

OK, we’ve got about 80% here, so I’m going to go ahead and close this out and share the results.

3:41

So, the biggest one is barriers in the data culture, or lack of support from the organization, coming in near a third, followed by a quarter of you with data quality issues. Tools or training, and user feedback or expectations, are pretty low. So, it’s not too surprising, but there’s a fairly even spread among the other four.

4:03

So, interesting. Thank you for sharing those insights. Hopefully, you find these

4:08

interesting as well. And with that, we’ll get into the heart of the presentation, and I’ll hand the floor over to you, Greg. Greg, it’s all yours.

4:16

Thanks, Michael, and thanks for having me. Welcome, everybody. It’s good to join you. It’s 8 AM here in Australia, and welcome to everybody who’s joined me from Australia as well.

4:27

Today, I wanted to start out with a bit of the grim reality of what we deal with as analysts. As Michael mentioned, I come from an enterprise IT

4:38

background, more on the operations side of the fence, and I moved into BI development in the last 6 or 7 years.

4:45

And I think the thing that we all know

4:50

is that building consistent, high-quality, accurate, robust data analytics solutions is kind of hard, right? It’s not as easy as a lot of people assume it is.

5:03

And I wanted to start out by looking at some of the challenges that we face as BI developers, as analysts and as data platform people.

5:14

So, I’ve got Jerry. He’s an analyst. He’s obviously got a lot of work and is not very happy. Jerry suffers from a lot of stress, and he’s got a lot of unhappy customers. And so, when we talk to the Jerrys

5:28

of the world inside organizations around the world, we find that there’s a common set of challenges that these analysts face.

5:40

The first one, and maybe the most obvious one in a lot of ways, is that data lives in silos. It lives in all sorts of different areas. You have different technologies in terms of the way data is stored, the way it’s captured and the way you access it. Some of it might be in the cloud.

5:56

Some of it might be on premises, and so there’s complexity associated with where the data is located, how we get to the information and how we access it. As well as living in different places, the data is complex, right? We’re often dealing with complex relationships. The data isn’t really optimized for analysis; it’s optimized for transactional systems, and so, as analysts, we’re often trying to wrangle the relationships between a complex series of tables.

6:23

Maybe the data is highly denormalized, or highly normalized, and you’re constantly having to wrangle these relationships and understand them. As well as data being complex, people are kind of complex in the way they think about data, the way they interpret data and the way they look at reports. As soon as you deliver a report, people have a whole set of new ideas, or they’re looking at the same numbers and interpreting them through their own lens. And so, Jerry’s constantly having to wrangle this relationship with people.

7:00

He also finds that the goalposts are always changing. One minute you’re playing one kind of sport, and then, through a series of actions that no one really understands,

7:09

suddenly you find you’re playing a totally different game. This is so common in our analytics world: you think you’re building something that delivers one solution, and you find that it’s a completely different requirement altogether.

7:28

And this comes into the idea that these data platform and reporting solutions, the pipelines associated with the flow of data into our organization and the flow of insight, constantly need maintenance. Jerry finds he’s constantly having to go back and maintain data pipelines. Entropy means that all of these pipelines are trending towards failure. That could be a data quality issue. It might be security. Somebody types the wrong information into a field in a way nobody thought of before. And Jerry’s having to go back and fix and maintain these data pipelines to keep these reports and data solutions up to date.

8:10

And really, the only way he can deliver anything is through heroics, you know? We see this in the data and reporting world: organizations where the best way to deliver something is, you know, to stay up all night or something like that.

8:30

And we know that a lot of the time, we’re really delivering via hope and prayers.

8:37

And so, that leads us into the topic for today, which is: how do the best-performing analytics teams deliver high-quality content on time, every time?

8:51

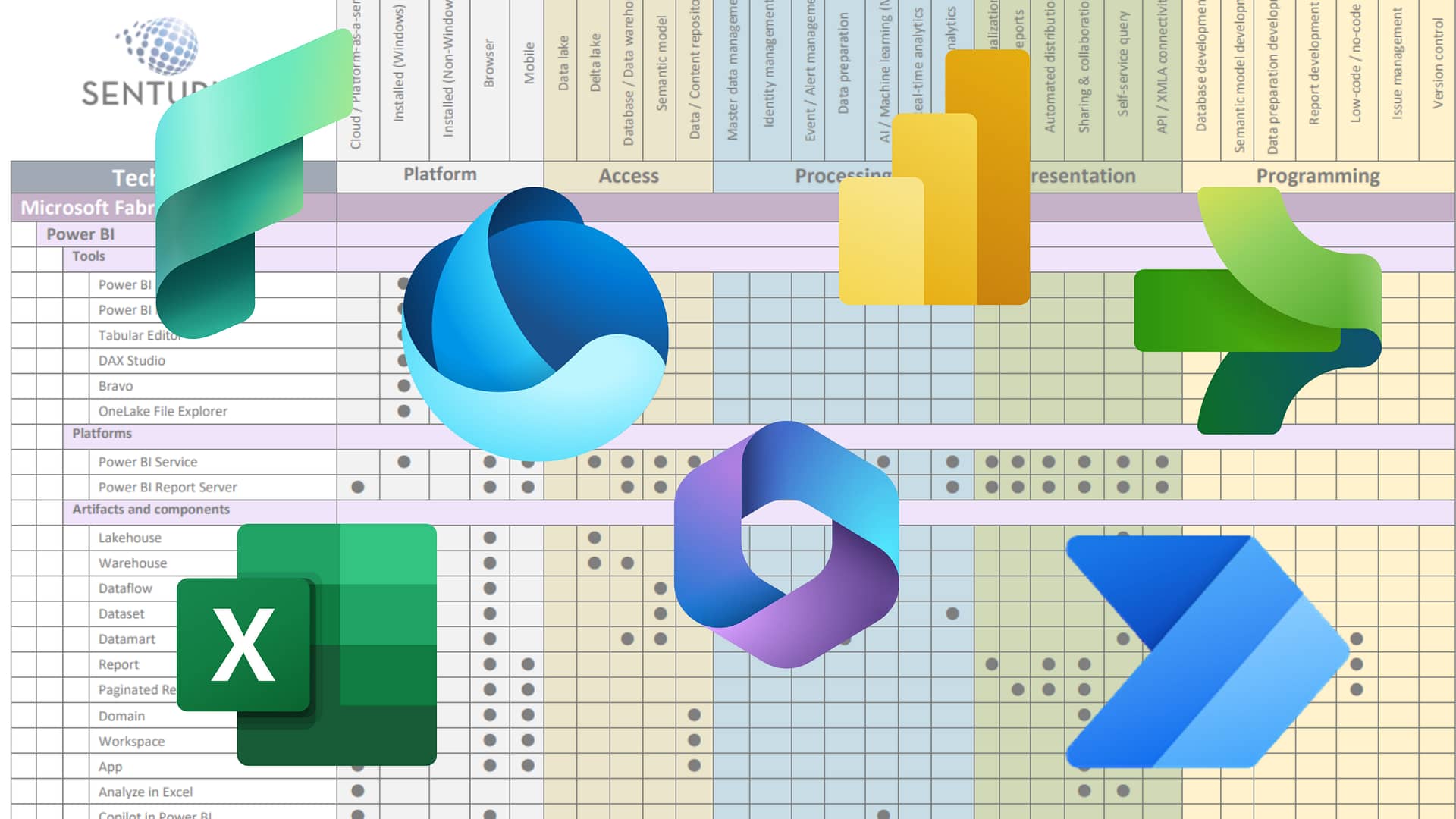

And so, the first thing a lot of organizations do is say, Well, what do we need? We’ve got an analytics problem, so let’s just get a brand new analytics tool. We’ll get Power BI. We’ll look at the Gartner Magic Quadrant, in the top right corner, and look at Power BI, the Microsoft solution. And, you know, it’s exciting, and everybody dives in, but they find,

9:15

and we see this across organizations, that the set of problems associated with delivery doesn’t have that much to do with the tool. In fact, when they get the new tool, they still struggle to deliver. They still have those user expectation issues, and the culture in the organization still doesn’t support analytics.

9:35

And so, it doesn’t really matter which tool we have.

9:40

And even if you’re not in the Power BI world, you might be using any one of these other tools.

9:47

The problems associated with delivery are universal across many BI and analytics tools.

9:56

So, how do we solve it then?

9:58

The good news is that, because it’s a problem associated with the way we work, our systems of work, we also have a universal solution across these tools. And we can look beyond the BI industry, stand on the shoulders of giants and look at the way other industries have solved similar operational issues.

10:23

So, we start out in the eighties, looking at the Toyota Production System. Toyota revolutionized the manufacturing of vehicles and really became the market leader through the operational excellence framework it developed. That evolved into what we call the Lean and Six Sigma movement, which started to break out of manufacturing, and suddenly it moved into a bunch of different organizations.

10:50

And then, finally, we saw this adopted by our friends over the fence in the development space, where they’re applying DevOps principles to increase the consistency with which they deliver code and the speed at which they can deliver new solutions.

11:13

And so, even though DevOps teams and BI development teams have a lot in common, and often share skill sets, what we deliver, and the way we deliver it, is slightly different. So, what we need to do is take some of the fundamental principles the DevOps movement uses and rethink how we can apply them to our BI development workloads.

11:44

So, breaking it down, what are the main, fundamental principles that the DevOps movement, the Toyota Production System and Lean used to improve the delivery of development solutions? In the DevOps movement, these are known as the Three Ways of DevOps. There’s a book called The Phoenix Project, which many of you may have read, about this subject, and it breaks things down into these three ways. The first way is about improving flow. This is really about understanding how work flows through your development system, how you actually deliver work and what steps you take.

12:24

After we improve our flow, look for bottlenecks and find out how to make it faster, we then want to improve quality through what they call feedback loops. This is around testing, quality and security, and making sure that the people who are responsible for delivering things have feedback on whether it’s going to work or not.

12:43

And then, finally, there’s this idea of safety culture. Safety culture is really about two things.

12:49

Safety culture is about the safety of our systems: how robust our system is and how resistant to failure it is. So, system safety. And then there’s also the psychological safety of our people. That’s really about ensuring that we’re building a culture where developers can give the boss bad news, if you like, and tell them that something is wrong, in a safe way that doesn’t cause someone to blow up and everybody to start blaming other people. So, we’re getting out of a blame culture and into a safe system of work, where you adopt a culture of continuous learning. That culture of continuous learning effectively means that you out-learn your competition and become one of these superstar development teams.

13:39

Well, I could talk for hours about all three of these subjects, and I’d love to, anytime you want to invite me. But today, we’re going to concentrate on the first way, the thing we can do right now as development teams that are just being introduced to this: improving flow.

13:57

So, the first way, improving our BI development workflow, comes down to four different areas: understanding your audience, identifying your value streams, confirming roles and responsibilities, and managing and improving the flow of work. So, we’re going to dive right in with understanding your audience.

14:20

That really is the first thing we do when we come into a new organization. I always work in the Power BI space, so they’ll say, Hey, we’re looking at Power BI.

14:36

We want to adopt it inside our organization. And so, really, the first thing we ask is, Tell me about your users. Understanding your audience is really about learning what is valuable in what we do: what is actually valuable and what’s not.

14:57

So, it’s crucial for every analyst, everybody who works in your data platform or on your data pipelines, to have a clear understanding of what your users are using this data for. Let’s say Simon the salesman is presenting data to a customer or end user. He has to have faith that the data is correct. But we also, as analysts, need to understand how he’s using it, so that we know what’s valuable to him and what’s not.

15:35

Really, as data people, the way I like to think of it is that our product, the thing we actually deliver, is insight, right? We’re an insight delivery business.

15:45

And so, ultimately, the users decide. Because they’re the ones having the insights, if you like, they are the ones who decide whether they can glean insight from your BI or not. Therefore, they will decide whether what we do is valuable and, therefore, you know,

16:02

whether to keep us around to continuously deliver more reports.

16:09

So, when we think about the different users of BI workloads, you can break them up into some fairly common cohorts, and we tend to see a lot of common patterns. There’s usually some kind of executive cohort that is more interested in, say, KPIs. The way I think about executives is, you know, I just want to see a panel of all green lights, and if one of the lights goes red, I want to be able to deep dive and ask, Why is that red? So, it’s a much more simplistic view, but the complexity underneath it is obviously really high. It’s almost like the simpler the view you want to give people, the more complex the data pipeline you have to deliver underneath, because you’ve got to aggregate all this stuff up into one giant green or red KPI.
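To make the executive roll-up concrete, here is a minimal Python sketch; it is an illustration, not something shown in the webinar. It assumes a simple rule: each KPI is green when it meets its target, and the headline light is green only when every underlying KPI is green. The `Kpi` class, its fields and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    actual: float
    target: float
    higher_is_better: bool = True  # False for KPIs such as churn, where lower is better

    def status(self) -> str:
        """Green if the KPI meets its target, otherwise red."""
        if self.higher_is_better:
            met = self.actual >= self.target
        else:
            met = self.actual <= self.target
        return "green" if met else "red"


def rollup(kpis: list[Kpi]) -> str:
    """The executive's single light: green only if every KPI is green."""
    return "green" if all(k.status() == "green" for k in kpis) else "red"


def drill_down(kpis: list[Kpi]) -> list[str]:
    """Answer 'why is that red?' by listing the KPIs that missed their target."""
    return [k.name for k in kpis if k.status() == "red"]


# Hypothetical figures for illustration only.
kpis = [
    Kpi("Revenue ($M)", actual=12.4, target=12.0),
    Kpi("Churn rate (%)", actual=3.1, target=2.5, higher_is_better=False),
    Kpi("On-time delivery (%)", actual=97.0, target=95.0),
]

print(rollup(kpis))      # red
print(drill_down(kpis))  # ['Churn rate (%)']
```

The executive only ever sees one light; the complexity Greg describes lives in everything upstream that has to produce a trustworthy `actual` value for each KPI.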

16:55

So, we’ve got that cohort of users. Then we’ve got the analysts. They’re going to be much more interested in slicing and dicing data. They want to dive in there, often following on from the executive asking, Well, why is this number red? And so, we need to give them solutions that are more about cutting into the detail, getting down to row-level data and then being able to manipulate that and create their own insights. Their use is very different from, say, a data scientist’s. For a data scientist, you might just point directly at your data lake and say, Have at it. A data scientist is potentially going to be looking at your data at a very raw level and then giving you competitive advantage through predictive analytics, or ML and AI solutions, really deep-diving into data and getting insights out of it that you didn’t even think of. So, their needs when it comes to data and reporting are very different from the other two.

17:49

And then you’re going to have casual, day-to-day operational users, who are going to be using it to look at their reports. They’re not that interested in slicing and dicing data; they’re more interested in just getting the report and doing their day-to-day work. And then, of course, you’ve got your BI team and your developers, who are going to have all sorts of different needs as well.

18:12

One of the things we see when we talk to organizations that are struggling with data is that they don’t have a clear idea of who their users are. BI developers aren’t always the most social people, and they aren’t often engaged with their users. So, we want to spend as much time as possible trying to understand the user cohorts inside your organization and how they’re going to consume the information. By understanding this, we start to understand the value of what we build.

18:42

One of the things marketing organizations do is build these kinds of user personas. You might want to go this far, or you might not; often it’s enough just to go through that first slide that I had. But sometimes, they build out more complex understandings of these users.

18:58

And when you go through this process and have these conversations with your users and your team, the team starts to have a common understanding of what, say, Amy Jones, the fictional executive, is like. She doesn’t really like it when we give her a report as a PDF file; she’d much prefer to interact with the report. But she only wants simple dashboards with just KPIs and graphs on them. You might have a template that’s specifically designed for executive reporting, as opposed to a template designed for somebody else. The more you understand about these users, the more you know what they value. So, when you book a meeting with your executives, or with the people who are consuming your reports, the key things you really want to understand are: what are their challenges, and what are their goals? What’s causing the pain, and what are they trying to achieve? If you can get those two things for each of your users, you’ll have a much clearer understanding of what they’re going to see as valuable in your reporting.

20:05

So, quickly, on engaging with our audience, a few best practices for when we first go into organizations: have regular engagement meetings with stakeholders and learn their goals and challenges. As I mentioned, sit down with them. Book that meeting. What are you trying to achieve? How do you measure it? What reports are you using?

20:25

How do you use those reports? I think that’s a question we see people not asking enough. Often a BI developer will just be given an Excel spreadsheet and told, I want you to replicate this exactly in Power BI or Tableau or whatever.

20:40

And the BI developer doesn’t ask, Well, how do you use this report? What action do you take as a result of reading it? The more you understand the actions they’re trying to take, the more you’ll find, particularly with new tools, because our tools are always changing,

20:56

that the new set of tools can deliver the same solution in a much more engaging way, or that there are all sorts of different things you can do. If you understand what they’re trying to do, and the team has that common understanding of the way the different user cohorts work, the team will be able to suggest new things and generate whole new solutions, because users don’t know what they don’t know, right?

21:23

So, we’re trying to create that strong link between the BI reports we build and the end users’ needs, goals and challenges. By doing that, we can prioritize while we’re developing. If we know that a particular executive or operational manager is trying to achieve a particular thing, then when they ask us for a report, we can prioritize the things that are most valuable in that report and give them that value early. If there are other things that might take a little longer, we can build those as we go, and the person is still going to be happy, right, because they’re getting the main crux of the value out of that report as early as possible.

22:05

So, we’ve gone through the process of understanding our audience. The next thing we want to do, and really, the meat of this conversation today, is around identifying and documenting our value streams.

22:20

So, value streams are really about understanding how the work we do flows through what I call our development system: the series of steps we take to develop BI and data solutions.

22:34

When I start talking about value streams, I always like to remind myself of Gall’s law. John Gall wrote a book called Systemantics back in the seventies; it’s now called The Systems Bible. It’s a bit of an irreverent book about the way systems are constantly failing and all that kind of stuff.

22:50

But the law that came from this book is that a complex system that works is invariably found to have evolved from a simple system that worked.

23:02

And this is a really interesting idea when you apply it to the BI landscape. I think one of the mistakes we make as BI developers, and I definitely make it, is that we build too much complexity into our initial designs. We say, Oh, we’re going to build this really cool report.

23:19

It’s going to have 30 tables in it, and we try to define all those relationships. But when it comes to data platforms and reporting, because of all the different steps that need to happen to get data into the hands of users, we’re almost always dealing with a complex system.

23:36

And so, the thing I always try to keep in mind when I’m talking to customers and to people who are going to build reports is that we want to build that working simple system first.

23:46

And so he sort of goes on to say that a complex system designed from scratch never works.

23:51

It can’t be patched up to make it work, right? You have to start over, beginning with a working simple system. So, this is the idea of beginning with a simple working system and evolving it, rather than trying to build a complex system and then patching it up.

24:08

And so, definitely worth checking that book out if you’re interested in systems and entropy.

24:16

So, back to value streams. What is a value stream when we’re thinking about these complex systems and the way we develop BI solutions? The way the DevOps movement defines a value stream is, as I call it, from the aha moment: from concept. In the data world, that might be from the moment data gets entered into the system, or it might be from the moment a person comes up with an idea for a report or a KPI they want to create. Then there’s a series of steps that happens. We do something, something, something. And then, finally, the CEO gets the beautiful pie chart and is making complex decisions about what they need to do, guided by that insight. So, really, a value stream runs from concept through to delivery, and the question is: what are the steps we take to get there?

25:18

So, quickly, before we get into designing a value stream and the process of doing that: why do we want to use value streams? The first thing is that they’re going to help your team.

25:28

Your team’s going to have a common understanding of the users after going through the user process. Now we’re going to try and build a common understanding of our systems of work, right, all the way through. You’re going to have lots of different people and lots of different teams potentially touching your data as it goes through and as you build these insight delivery solutions. So, you want a common understanding of what happens when, and this is the way we can do that.

25:55

It’s going to help us maintain consistency across different developers, content creators and teams. It’s going to help us prevent passing issues downstream. Say I’m a data engineer: I extract data and put it in the data warehouse.

26:09

If I understand how analysts then query that data and model it for downstream work, it’s going to help me prevent issues being passed to the next person, because I’m going to start to understand that person’s dependencies.

26:24

And really, where we see the big benefits in terms of optimizing our BI delivery is in removing the barriers between teams. The communication between silos and between teams is where all the juice is in terms of building fast-moving data analytics solutions.

26:47

It’s also going to help you demonstrate your value to the business, because you’re going to tie your work to value streams. They’re called value streams for a reason: you end up with value at the end. So, when someone comes to you and says, Hey, we need to do this, we’ve got a project around this kind of thing, you can tie the activities associated with that project back to a demonstrable piece of value that the business will be able to conceptualize and say, This is worth this much money to us.

27:14

That’s really, really important in terms of getting budget. It also makes it easier for us to estimate the work, because we understand all the steps that need to happen. You get less of that situation where developers forget, Oh, whoops, I forgot that we have to do these 17 steps before we actually get to the modelling stage, so I did an estimate that doesn’t take that into consideration. Now we have to have a hard conversation with our end users: Sorry about that, we forgot that we have to do these extra things, and it’s going to cost you more money to get the thing that you want.

27:49

It also allows us to focus on optimization instead of arguing about how it’s done. We see that a lot. You might have different people inside your engineering teams, or different analysts, with slightly different approaches. If you come up with a common understanding of how you approach the work, you spend more time focusing on how to make it better for everybody, rather than, I do it this way, and you do it that way.

28:16

So, we have to keep simplicity in mind when we’re developing these value streams. If you Google value streams and value chains, you get these complex diagrams from the supply chain world, but we don’t need to do anything anywhere near that complex. All we really need to do is first work out a simple understanding of our system of work. So, we just have a whiteboard session and say, Hey, what do we have to do to deliver a report? Your analysts might say, OK, well, first we query some data, so we go and get the data. Then, in the Power BI world, we model that data, and then we write some DAX in Power BI to create some calculations. So, we sum up all the values and do all the ratios, all the bits and pieces we need for these KPIs. Then we put them into visuals, so we create our pie charts and line graphs and all those things, and then we share that out to the organization.
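The whiteboard version of the value stream can be captured as nothing more than an ordered list of steps. The Python sketch below is an illustration rather than a tool from the webinar; the step names paraphrase the analysts’ description, and `ANALYST_STREAM` and `describe` are hypothetical names.

```python
# Version 1 of the value stream, as the analysts might describe it on the whiteboard.
ANALYST_STREAM = [
    "Query the data",
    "Model the data",
    "Write DAX calculations (sums, ratios, KPIs)",
    "Build visuals (pie charts, line graphs)",
    "Share with the organization",
]


def describe(stream: list[str]) -> str:
    """Render a value stream as one numbered, left-to-right flow."""
    return " -> ".join(f"{i}. {step}" for i, step in enumerate(stream, start=1))


print(describe(ANALYST_STREAM))
```

Keeping it this plain is deliberate, in the spirit of Gall’s law: start with a simple list that works, then let engineering and governance steps be added as the team discovers them.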

29:16

And so, that’s the first version. But then the engineering team says, Well, yeah, that data doesn’t just appear

29:22

in our data warehouse by magic. There are a few steps we do that get the data in there. The first thing we do is extract data from a source system.

29:31

So, if this was a brand-new, never-seen-before piece of data that we were trying to get to, then we would have to write an extract script and run it. Usually, we store that in a data lake, and then we pull it out of the data lake, transform it, optimize it for analysis, and put it in the dim layer of our data warehouse. Then you guys have the opportunity to query that data and do your modelling and DAX and all the bits and pieces. OK, so we’ve got version two of our value stream there, including all the bits and pieces in engineering, and you continue on with this process.

30:03

Eventually you get to a point where you start to have a common stream of work that has to happen for almost every insight you want to deliver in your organization. That often starts with the entry of the data by the end users. Then, if you’ve been watching the Senturus governance webinars from last month, you might say: well, we definitely need some data quality checks, and we need to use the new data quality tool that we just got to check the data at the entry point. Then we extract the data, so that’s the engineering team pulling it out. Then we want a security check, because we’re worried about PII.

30:40

We don’t want personally identifiable information extracted out of our source systems without some kind of approval process. So then you’ve got a security check that happens. Then you might store the data inside your data lake, and then you transform and optimize it for analysis. Then you query it, model it and write the DAX. Then you visualize, and then you share it. But then you might schedule it to be refreshed.

30:59

You know, a lot of people forget about the scheduling step as well.

31:04

And so you go on and on through this, and every organization is different. I can’t give you a single “this is how the BI development value stream works for everybody,” because everybody has slightly different needs, and you’re probably going to build a few different value streams.

31:24

So, once we’ve listed out the steps and thought about the order of operations, if you like, in terms of development, we want to start looking at the timings of each step. The ideal here is to shorten this value stream so that it’s as quick as possible. When you start looking at the timings of each of the steps, what you’ll hopefully start to see is where the bottlenecks are. Let’s say, in this example, the security check is a manual process right now. The security team has to come in and get a copy of the extract script, and then they take three weeks to go over the script with a fine-tooth comb and check it against best practices, and then they give it back to you. So you’re saying: OK, there’s a three-week bottleneck in our delivery process that we need to think about. Is that the thing that’s going to cost us the most time?

32:16

That is, if we came in with a brand-new, never-seen-before piece of work that had to be extracted from its source system.
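To make the timing exercise concrete, here is a minimal Python sketch that treats a value stream as an ordered list of steps with rough durations. It sums the lead time and flags the slowest step as the bottleneck candidate. The step names follow the version-three stream described above. Measuring in working days is an assumption, and every duration except the three-week security check is a hypothetical placeholder.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    days: float  # rough elapsed working days; estimates are fine


# Hypothetical timings: only the three-week (15 working day) security
# check comes from the talk; every other number is a placeholder.
VALUE_STREAM = [
    Step("data entry", 0.5),
    Step("data quality check", 1),
    Step("extract", 2),
    Step("security check", 15),
    Step("store in data lake", 0.5),
    Step("transform and optimize", 3),
    Step("query, model and DAX", 3),
    Step("visualize", 2),
    Step("share", 0.5),
    Step("schedule refresh", 0.5),
]


def lead_time(steps: list[Step]) -> float:
    """Total elapsed time from concept to delivery."""
    return sum(step.days for step in steps)


def bottleneck(steps: list[Step]) -> Step:
    """The slowest step: the first candidate to optimize."""
    return max(steps, key=lambda step: step.days)


if __name__ == "__main__":
    total = lead_time(VALUE_STREAM)
    worst = bottleneck(VALUE_STREAM)
    share = worst.days / total
    print(f"Lead time: {total} days")
    print(f"Bottleneck: {worst.name} ({worst.days} days, {share:.0%} of lead time)")
```

In this sketch, the security check accounts for more than half of the lead time. Shaving a day off any other step barely changes the total, which is why the bottleneck is the place to start.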

32:26

So, as you go through that process, you’re going to end up with slightly different value streams. You might find that different users have different entry and exit points for the value streams, or they might have their own value streams altogether. Your executive will probably go all the way through: if they needed a KPI and we didn’t have anything for that data set before, we’d have to go all the way back to data entry and come all the way through to the visualize-and-share area. Your data scientists, on the other hand, might exit at the store step, because we’re just putting the data into the data lake for them. Then they go off and have their own value stream for developing advanced analytics, and maybe pipe that back in. So you might have a different version of the value stream for data scientists than you do for executives.
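One way to picture different entry and exit points is as slices of one shared, ordered list of steps. This hypothetical Python sketch has the executive travel the full stream while the data scientist exits after the store step. The step names and the persona table are illustrative assumptions, not a prescribed structure.

```python
# Ordered steps of a single, shared value stream (illustrative names).
STEPS = [
    "data entry",
    "data quality check",
    "extract",
    "security check",
    "store",
    "transform",
    "model",
    "visualize",
    "share",
]

# Hypothetical entry and exit points per audience (both inclusive).
PERSONAS = {
    "executive": ("data entry", "share"),
    "data scientist": ("data entry", "store"),
}


def stream_for(persona: str) -> list[str]:
    """Return the slice of the shared stream that a persona travels through."""
    entry, exit_ = PERSONAS[persona]
    start, end = STEPS.index(entry), STEPS.index(exit_)
    return STEPS[start : end + 1]


for persona in PERSONAS:
    print(f"{persona}: {' -> '.join(stream_for(persona))}")
```

Keeping one shared step list means an improvement to a common step, such as the security check, benefits every audience that passes through it.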

33:16

So, what do we want to do in terms of delivering our value streams? We want to gather as many stakeholders as possible into our value stream design meetings, and we want to socialize this understanding of the value stream as far and as wide as possible. So we really want to try and understand all those different people, and we’ll talk about people in a sec in terms of roles and responsibilities.

33:41

But we just want to make sure that we’re listing all the steps from concept to delivery, from catching the idea onwards.

33:48

We want to look at the timings of each of those steps. Remember, it doesn’t have to be perfect. We just want a rough idea, so that we can start to focus on how to speed this value stream up and deliver these insights faster. It’s all about throughput in the end, so we’re really looking for the bottleneck.

34:05

There’s a whole theory that we can apply here, and many of you have probably read the book written in the eighties called The Goal, which is all about the Theory of Constraints.

34:14

And that’s really what the DevOps movement and a lot of these other movements have used as first principles to go back and optimize the speed of work. It’s a great subject to talk about and there’s a lot to it, so I’m happy to answer questions about it later if you like. Really, the goal here is to increase the throughput of the system. In the book, the goal isn’t necessarily to increase throughput; I won’t spoil it for you, but it’s to make money. But for us, the idea is that we want to increase our throughput as much as possible.

34:57

OK, so, roles and responsibilities. We now know who our audience is, we kind of know the value of what we do, and we’ve got an idea of how it gets there from identifying these value streams and the steps they take. Now we need to think about the people associated with that: the roles and responsibilities, in other words, who does what and when. Look at version three of the value stream that we’ve hastily thrown together on our whiteboard, and you can see a lot of different roles coming into it. We’ve got data entry being done by the users. We’ve got the quality and security checks done by the data governance team. We’ve got the extract, store, and transform steps done by our data engineers. Then we’ve got some analyst work: the analysts might be doing the modelling and calculating the DAX, and maybe doing a bit of the query work themselves. And then it’s back to the users, because this is a self-service value stream, and we want self-service in our organization. So they do that.

35:47

The users do the visualization and the sharing of reports, but then the data engineers get involved in making sure that the data sets are kept up to date, or maybe that’s the analysts. It’ll be different for different organizations.

35:59

But you end up seeing that it takes a village to raise a report, right? So we now have to deal with the challenges of information passing through many different people, and everybody’s going to have different ideas. There’s going to be what we call communication overhead at every step where work is handed over from one team to another. Particularly in organizations that are very siloed and functionally organized, there has to be some kind of communication between those teams. So you’re going to see that handoff.

36:36

As you come to understand this value stream, the handoffs will emerge as one of the main pain points you need to think about.

36:45

This is especially challenging now with Covid-19, with everybody working from home and, you know, half of the people in lockdown.

36:53

So this is even more of a challenge when it comes to delivering BI solutions on a consistent basis, because we can’t even sit in the same room and have that common understanding of what’s happening. The siloing is even worse, so we really have to consider how this works and how we approach it.

37:18

Ultimately, the ideal, what we want to try and achieve, is to get everybody who’s associated with the value stream in the same room when it comes to delivering that project.

37:29

So, when it comes to roles, what we want to do is identify the roles that we need to deliver the value stream, right?

37:34

So, identify those roles and understand who they are. Then, when it comes to doing a project that delivers BI solutions, we want a cross-functional team that works together. I’d say this is something a lot of organizations struggle with conceptually: getting the business user, the data engineer, the analyst, and the person who enters the data all in the same room, working on that project together. You can second them into the team for the project if it’s a big, mission-critical project. Or you might just have them come and sit with the team one day a week, or have a regular meeting where those people provide feedback.

38:10

And you need that constant feedback, because you’ll find that analysts sit there on their own.

38:15

They end up in a little bubble, and they’ll often stray into areas that nobody likes, because there’s a lot of interpretation that needs to happen along the way. There’s a lot of exploration, and as analysts we make decisions at every development step. A lot of those decisions are based on assumptions, and if we don’t have the person who asked for the report looking over our shoulder, we’ll go down the wrong path. We see that a lot in delivery, particularly in the Power BI world, where it’s so easy to throw a whole bunch of stuff on the page.

38:44

People stray into an interpretation that I, as the analyst, thought was right, but that isn’t quite right according to the business users or other people.

38:57

This is also true for data engineers. Data engineers will create data sets, or they will extract data.

39:04

And some of it will be completely useless, because they didn’t quite understand what we meant from a business perspective or from an analyst’s perspective. So we want to carefully manage those communications and handoffs. Be hyper-aware, if you like, of who the roles are and how they’re communicating with each other. And really, the best practice in value stream management is having what we call a data product manager: someone who’s responsible for that value stream, if you like. So, let’s say we’ve got a supply chain person.

39:36

For supply chain analytics, the supply chain analytics data product manager is going to have ownership over all of the supply chain data. How do we get supply chain data? Supply chain might be products coming in, how we distribute those products, what’s out of stock, and all that kind of stuff. The product manager for supply chain is going to want to understand where all of the associated data products come from, and that’s the person who starts to see it from a value stream point of view instead of a functional silo point of view. When you understand that, and you understand those dependencies, which we spoke about a little earlier, you start to see the different roles and responsibilities and how they interact with your value stream.

40:22

So, finally, we’ve gone through the process of understanding the value. We now know the steps and how they work, and we understand who’s involved and how to manage that. The last piece is the day-to-day operation and management of the flows of work that we’ve identified.

40:44

So it’s really important to remember, like I said earlier, that we’re an insight delivery machine. This machine needs constant maintenance, so you always need to think of it as an ongoing concern. I think a big mistake organizations that struggle with data make is seeing reporting projects as discrete projects: we’re going to start a reporting project, at the end we’ll deliver the report, and that report will be correct forever and nothing will ever go wrong with it. That’s a conceptual mistake, because reporting is an ongoing, ever-evolving machine that we need to maintain and keep up to date. There’s a lot to be said in this space, of course, but we don’t have a lot of time left, so I’ll stick to a couple of quick best practices that we see in high-performing teams.

41:39

So the first thing, and really the key thing I see in high-performing BI teams, is this idea: don’t boil the ocean. Work in small batches and deliver those insights really quickly.

41:52

Where we see people struggle with data, and this happens with engineers, analysts and report builders, is that they try to say: well, we’re going to build this sales reporting. The sales reporting is 12 different reports, each of those has 12 different pages, and each page has 18 different visuals on it. So you end up with a huge amount of work all bundled up into “the sales report.”

42:19

What you want to be doing, as much as possible, is breaking those down into their key components. The way I approach this with our team when we’re delivering stuff is to break it down to the tile level, so every one of the cards we work on is an individual piece of work. And you find that when you work at this smaller level, you can deliver quickly.

42:41

That means I can deliver this one card to my end users, and they can be looking at that card. In this case, it’s total excluding tax and margin by invoice date, which is really easy to understand, right? All I need are two measures, total excluding tax and margin, plus the invoice date column. So it’s a three-column table to deliver this visual. I can go and make sure those columns exist in my data warehouse, and if they don’t, I can ask the engineering team: just give me those three columns.

43:09

And then, downstream of that, I can deliver this piece of work to my end users. They can be looking at just this tile while I go back and work on the next one. So you end up with a higher cadence of feedback from the users, because you’re delivering small pieces more often.
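The tile example shows how small the data requirement for one card can be. This Python sketch treats the three columns as plain rows and sums both measures per invoice date, the way the “total excluding tax and margin by invoice date” visual would display them. The column names and sample figures are hypothetical. In Power BI, the visual and its measures would do this aggregation themselves.

```python
from collections import defaultdict
from datetime import date

# The whole data requirement for one tile: three columns.
# Column names and values are hypothetical sample data.
rows = [
    {"invoice_date": date(2021, 3, 1), "total_excluding_tax": 1200.0, "margin": 300.0},
    {"invoice_date": date(2021, 3, 1), "total_excluding_tax": 800.0, "margin": 150.0},
    {"invoice_date": date(2021, 3, 2), "total_excluding_tax": 500.0, "margin": 90.0},
]


def tile_series(rows: list[dict]) -> dict[date, tuple[float, float]]:
    """Sum both measures per invoice date, ordered by date, as the tile shows them."""
    totals: dict[date, list[float]] = defaultdict(lambda: [0.0, 0.0])
    for row in rows:
        bucket = totals[row["invoice_date"]]
        bucket[0] += row["total_excluding_tax"]
        bucket[1] += row["margin"]
    return {day: (total, margin) for day, (total, margin) in sorted(totals.items())}


for day, (total, margin) in tile_series(rows).items():
    print(day.isoformat(), total, margin)
```

Because the input is only three columns, checking that they exist in the warehouse, or asking engineering for them, is a small request rather than a whole-database effort.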

43:25

And almost always, we see people do the opposite. They go: let’s bring the whole database in. That’s a giant effort for the engineering team. Then we build a giant data model, and that giant data model takes a huge amount of effort to understand. Then, at the end, we give the whole report to the end users and say: give us feedback on this report.

43:46

And it’s like, well, this is a million different pages, and there are too many things for them to understand. They have to reconcile it all back to their original reports. It just becomes too much, and you never get the feedback you need. You end up doing a whole bunch of rework because something’s wrong and they didn’t notice it, or you didn’t notice it, or whatever.

44:05

The way we’ve tried to handle this is to make work visible. So, back to Jerry, the analyst. We can see Jerry’s busy because he’s got a pile of paper on his desk, right? It’s easy to see the work he has. But we work digitally now; we don’t get to see when someone’s got a pile of papers on their desk anymore. So we need a system that makes this work visible, so we can watch it flow through our teams and through the value stream.

44:32

So, the easiest way to do this, and many of you will already do this, is to use Kanban boards.

44:37

So, get those sticky notes and stick them up on a whiteboard or a piece of paper. I think my camera’s on, and I’ve got a Kanban board behind me; I don’t know if you can see it in your view. It’s a bit dark.

44:46

Physical and digital Kanban boards both work really well. The great thing about a physical Kanban board is that you can walk around the office and see who’s doing what and how busy they are. That’s really useful from a management point of view, and it’s also great for the team to be able to see who’s doing what and when.

45:03

Digital Kanban boards are just as good. They don’t have that walk-around effect, but you get a common place where everybody can see what’s happening all the way along the value stream, so the teams can work together and see who’s doing what and when. Kanban is one of the main ways to do this, and in Agile and DevOps delivery workflows, Kanban is a big part of making work visible and delivering it.
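At its core, a digital Kanban board is a set of cards, each sitting in one column and owned by one person. The minimal Python sketch below derives the two views described above from that structure: what is in each column, and how busy each person is. The column names, card titles, and owners other than Jerry are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical board columns; a real board might use the value stream steps instead.
COLUMNS = ["To do", "In progress", "Review", "Done"]


@dataclass
class Card:
    title: str
    owner: str
    column: str


board = [
    Card("Total excl. tax by invoice date", "Jerry", "In progress"),
    Card("Margin by invoice date", "Jerry", "In progress"),
    Card("Extract invoice lines", "Priya", "Review"),
    Card("Stock on hand tile", "Jerry", "To do"),
    Card("Sales per day tile", "Sam", "Done"),
]


def by_column(cards: list[Card]) -> dict[str, list[str]]:
    """What is in each column: the view you get from walking past the board."""
    return {col: [c.title for c in cards if c.column == col] for col in COLUMNS}


def work_in_progress(cards: list[Card]) -> Counter:
    """How busy each person is: cards they own that are not yet done."""
    return Counter(c.owner for c in cards if c.column != "Done")


for column, titles in by_column(board).items():
    print(f"{column}: {titles}")
print(work_in_progress(board).most_common())
```

A physical board gives you these same two views at a glance. The point of the digital version is that everyone along the value stream sees the same board.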

45:29

Then, finally, to wrap it all up: how we measure these things and try to improve the flow of our work. There are three measures that really matter, and they were first brought up in The Goal, the book about the Theory of Constraints that I mentioned earlier.

45:46

First is throughput, and I think of this as the number of insights per day. A quick way to measure it, as a manager or as someone observing the team, is this: suppose you get an email saying, hey, I need a new report that shows me the number of sales per day.

46:01

How long does it take until the analysts can deliver that piece of information? A single insight, if you like.

46:07

What I often see when we first go in is that it might take a week, two weeks, or a month to get a brand-new insight delivered to the user. Then, with small batches, we’re looking for the number of insights per day to go up, because we’re reducing that time, so we get a high cadence. That’s why throughput becomes really, really important: we’re looking for a high cadence, a high number of insights per day.

46:32

The second thing is cost per insight, obviously. You look at the cost of the team and the tools and all that kind of stuff, and then at how many insights we deliver for that cost.

46:42

We want to see that go down over time: as we improve throughput and get a higher cadence of insights per day, the cost per insight drops. And then there’s cash flow, which is really about your budget burn-down when you’re running a project. Or, if you’re working with consultants, maybe you’ve got X amount per month that you can spend, so you want to make sure you’re staying within those budgets. So those are the three measures that really matter.
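The three measures reduce to simple arithmetic. This Python sketch computes them for a hypothetical month before and after moving to small batches: insights delivered per working day, cost per insight, and remaining budget as a basic cash-flow check. All figures, and the use of working days as the time unit, are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Period:
    working_days: int
    insights_delivered: int  # e.g. tiles or cards accepted by users
    team_cost: float  # people, tools and so on for the period
    budget: float  # amount available for the period


def throughput(p: Period) -> float:
    """Insights delivered per working day."""
    return p.insights_delivered / p.working_days


def cost_per_insight(p: Period) -> float:
    """Total cost divided by insights delivered."""
    return p.team_cost / p.insights_delivered


def budget_remaining(p: Period) -> float:
    """Cash flow check: a negative value means the period ran over budget."""
    return p.budget - p.team_cost


# Hypothetical month before and after moving to small batches.
before = Period(working_days=20, insights_delivered=4, team_cost=40_000, budget=45_000)
after = Period(working_days=20, insights_delivered=30, team_cost=40_000, budget=45_000)

for label, p in (("before", before), ("after", after)):
    print(
        f"{label}: {throughput(p):.2f} insights/day, "
        f"{cost_per_insight(p):,.0f} per insight, "
        f"{budget_remaining(p):,.0f} budget remaining"
    )
```

With cost and budget held constant, raising throughput is what drives cost per insight down, which is the relationship described above.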

47:09

There are a bunch of other measures, vanity metrics and the like, that you can put around this to make sure things are happening. But these three are the ones that actually move the needle in terms of cadence.

47:25

So that’s a quick summary, a bit of a quick-fire introduction, I guess, to the first way, which is about improving flow. If you can go through those things and think about them in the context of your team, you’ll really see a huge benefit. I’ve seen this over and over again: you get a big improvement if you can just do these first simple things. That puts us in a situation where we have a good understanding of how work flows through our system and how to make it faster.

47:56

That means we can deliver our work more quickly, but it might still be wrong, low quality, and insecure. That’s what we need to think about next, and we’ll discuss it another time.

48:10

Then we can talk about feedback loops and safety culture. But really, if you’re brand new to this, the first step is improving that flow.

48:21

And with that, that’s about it from me.

48:28

Yeah, so I’ve got my Twitter and other details up there if you want to catch up with me.

48:32

I do mentoring and Power BI adoption training in Australia.

48:37

And, with that, it’s over to you, Michael.

48:49

Great, thanks, Greg. That was a lot of information in a short period of time, so thanks for the whirlwind tour. If you want to move to the next slide.

48:57

And please stick around to get your questions answered. We will cover as many of them as we can, so go ahead and enter them in the questions pane.

49:08

If your organization is looking to move from more casual analytics to enterprise-grade analytics, Senturus can help you make that move: achieve enterprise-grade analytics, enable efficient, widely adopted self-service, and build a data culture. Contact us if you’re interested in learning about our adoption framework.

49:34

A few quick slides about Senturus before we get to some great upcoming events and the Q&A: we are the authority in business intelligence.

49:43

We concentrate our expertise solely on business intelligence with a depth of knowledge across the entire BI stack.

49:50

We are known by our clients for providing clarity from the chaos of complex business requirements, disparate data sources, and constantly moving targets. If you want to advance the slide there, Greg, please?

50:03

We have made a name for ourselves because of our strength in bridging the gap between IT and business users.

50:08

We deliver solutions that give you access to reliable, analysis-ready data across the organization, so you can quickly and easily get answers.

50:15

That’s the point of impact: the decisions you make and the actions you take.

50:20

As you mentioned, we do offer a full spectrum of BI services. Everything from the strategic to the tactical.

50:27

Our consultants are leading experts in the field of analytics, with years of pragmatic, real-world experience advancing the state of the art.

50:35

We’re so confident in our team, and our methodology, that we back our projects with a 100% money back guarantee, that’s unique in the industry.

50:44

And finally, we’ve been at this for over two decades, focused exclusively on BI. We work across the spectrum, from Fortune 500 companies down to the mid-market, solving business problems across many industries and functional areas, including the office of finance, sales and marketing, manufacturing, operations, HR, and IT.

51:04

Our team is both large enough to meet all of your business analytics needs, yet small enough to provide personalized attention.

51:13

If you’d like to expand your knowledge, go to the resources area of the Senturus website.

51:23

If you bookmark that and return to it, you’ll find hundreds of great free resources, from webinars on all things BI to our fabulous, up-to-the-minute, easily consumable blogs.

51:35

And along those lines, we also have a lot of great upcoming events.

51:39

We have three here that are scheduled over the next few weeks: a couple in March and one in April.

51:45

One is on data warehousing, comparing Snowflake and Azure Synapse.

51:52

We’ll have a Power BI Query Editor Jumpstart webinar on March 17th. That’ll be on a Wednesday. So, that’s a little different from our normal times.

52:03

Normally, our webinars are right around this time. And then there’s Six Ways to Publish and Share with Tableau.

52:10

And then, coming near the end of April, it’s not on this slide.

52:13

We are going to have a Cognos product manager review the new features of Cognos 11.

52:22

I’d be remiss if I didn’t mention that we offer a complete array of BI training. Greg, if you want to advance to the next slide, on all three major BI platforms.

52:32

The three top-tier BI platforms: Power BI, Tableau, and IBM Cognos. We’re ideal for organizations that run multiple platforms or are moving from one to another.

52:43

We can provide training in many different modes. As you can see, we have tailored group sessions, mentoring, instructor-led online training, and self-paced e-learning, and we can mix and match these to suit the needs of your user community.

52:55

Finally, additional resources.

52:57

If you go to our site, you’ll find hundreds of free resources; we’ve been committed to sharing our BI expertise for over a decade.

53:07

OK, and with that, we’ve got about seven minutes left here to cover some questions, and I’ve got some good ones here.

53:15

One of them is a classic.

53:18

I don’t know if you had a chance to look at these, Greg, but this gentleman says the business doesn’t have the time to work out specific report or dashboard requirements with the BI developers. So they’re often left with incomplete requirements for what the business wants to visualize and report on.

53:35

So they’re asking what tips you have for what developers can do on their own, without those exact requirements.

53:43

This really comes back down to the value stream stuff, doesn’t it? It’s about getting the business to understand the value of what we do. And you’re right: the business will often just send a spreadsheet off.

53:57

They just say, go, without helping to provide that extra insight.

54:04

So, the way we approach requirements at Dear Watson, when we get that, is to try and break it down.

54:12

We don’t accept your spreadsheet until you give us a bit more background information and we have a clear understanding of our development value stream. We’ve got a set of steps that we all know, and I can almost rattle them off. If you asked me for a report today, I’d ask you for a bit of background. I want to know the operating environment: what’s happening and how this currently works. What are you actually trying to achieve? What are your desired outcomes, and what are your objectives? Those are two slightly different questions. Desired outcomes are the good things you want to happen as a result of getting this report. Objectives are what we’re trying to do to actually deliver this report.

54:54

We talk about interfaces, so what the different systems are and where they live.

55:00

So we’ve got a checklist that every single one of my developers has to fill out before they can even start developing a report. And you have to hold the business hostage as much as you can before you agree to do anything. Otherwise, you’re right: they’ll just hand it to you, assuming that you know as much as they do about how they use reports and how this whole system works.

55:30

So you need a clear understanding of the different steps required to deliver any report, and you need to capture as much of that as you can up front. It’s about not passing issues downstream. No matter which tool you use, delivering a decent BI report has a certain number of dependencies. The developer needs a certain amount of insight into what the person is trying to achieve. The developer needs access to the data, obviously, and needs to be able to model it and do all the bits and pieces. So it’s about refining your initial set of questions, so that no development can happen until they’re answered.

56:18

You may need executive sponsorship to back you up on this: the team won’t do any development until the six questions have been answered. Then, when you find that you forgot to ask a question and it causes a problem downstream, you add that question to your set, so that next time you don’t have that problem. Unfortunately, that’s the only way: hold them hostage at the beginning.
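The “no development until the questions are answered” rule can be expressed as a simple gate. The talk doesn’t list all six questions, so the question keys in this Python sketch cover only the topics Greg names (background and operating environment, desired outcomes, objectives, interfaces) and are hypothetical labels. The sketch also follows his practice of adding a question whenever a downstream problem reveals a gap.

```python
# Partial, hypothetical question set: only the topics named in the talk.
REQUIRED_QUESTIONS = [
    "background / operating environment",
    "desired outcomes",
    "objectives",
    "interfaces (source systems)",
]


def missing_answers(request: dict[str, str]) -> list[str]:
    """Questions that are unanswered (absent or blank) for a report request."""
    return [q for q in REQUIRED_QUESTIONS if not request.get(q, "").strip()]


def ready_for_development(request: dict[str, str]) -> bool:
    """Gate: no development starts until every required question is answered."""
    return not missing_answers(request)


def add_question(question: str) -> None:
    """When a gap causes a downstream problem, add the question for next time."""
    if question not in REQUIRED_QUESTIONS:
        REQUIRED_QUESTIONS.append(question)


request = {
    "background / operating environment": "Monthly stock review",
    "desired outcomes": "Fewer stock-outs",
    "objectives": "",
}
print(ready_for_development(request))  # False
print(missing_answers(request))
```

Returning the list of missing answers, rather than just a yes or no, gives the developer something concrete to send back to the business.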

56:43

Yeah, and that is a universal problem that we find, so you hit upon the key things:

56:47

Having that executive sponsorship such that they can’t just say

56:52

“just go build it anyway.” And I also think that, with these tools now, it’s about building that culture.

57:01

A culture of continuous improvement and rapid iteration, where you present something to them, get their input, and refine it, instead of going away for six months and throwing something over the fence at the end.

57:15

I’m going to jump ahead a little here, to a question about organizations that are heavily process-oriented.

57:24

How do you get them to adopt a more Agile methodology?

57:31

Agile is definitely one of the foundational principles of the whole DevOps movement, obviously with the whole Kanban thing. For me, it comes down to batch size: the smaller the batch size, the more agile you are. The smaller each individual piece of work you hand to a person as it flows through, the sooner you find out if there’s a problem with the report.

57:57

Let’s say you’re trying to deliver a report page that has 10 visuals on it. If you try to deliver all 10 visuals on that page at once, spend two weeks doing it, and then deliver it, you only find out it’s wrong at the end, when you deliver the whole page. So instead, you do the first visual.

58:25

You build that and deliver it back to the users: you publish it and say, here’s the first visual for the first page, is that correct? If there’s a problem with that visual, you’ll know straight away, and you can adjust for all the other visuals. So the fundamental idea of agile is being able to move and pivot as we find issues, by delivering in small batches.
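The ten-visual example can be worked through with a little arithmetic. This Python sketch compares delivering the whole page at once with delivering one visual at a time. It makes three assumptions for illustration: the two weeks are ten working days, each visual takes one day, and there is one shared misunderstanding, present from the start, that affects every visual built before the first feedback.

```python
def feedback_outcome(visuals: int, days_per_visual: float, batch_size: int) -> tuple[float, int]:
    """Return (days until first user feedback, visuals built on a wrong assumption).

    Assumes a shared mistake is present from the start and is only caught
    when the first batch is shown to users, so every visual in that batch
    needs rework.
    """
    if not 1 <= batch_size <= visuals:
        raise ValueError("batch_size must be between 1 and the number of visuals")
    days_to_feedback = batch_size * days_per_visual
    visuals_to_rework = batch_size
    return days_to_feedback, visuals_to_rework


whole_page = feedback_outcome(visuals=10, days_per_visual=1.0, batch_size=10)
one_at_a_time = feedback_outcome(visuals=10, days_per_visual=1.0, batch_size=1)
print(f"Whole page: feedback after {whole_page[0]:g} days, {whole_page[1]} visuals to rework")
print(f"One visual: feedback after {one_at_a_time[0]:g} days, {one_at_a_time[1]} visual to rework")
```

Under these assumptions, the batch size sets both how long you wait for feedback and how much work a single misunderstanding can spoil. That is the sense in which smaller batches make a team more agile.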

58:47

Great. So, you’re kind of leading by example, if you will.

58:52

So, we realize that’s sometimes easier said than done, right? It’s not as if you can just magically make that happen across an organization.

59:00

But that’s, you know, sort of how you can maybe make it happen incrementally.

59:05

So, for the measures that matter: how do you know that you’re really delivering insight, versus just incentivizing the development of tons of reports?

Yeah, and that’s why it’s called a value stream, right? Because we want to know the value, and that really comes down to understanding those users and what they’re trying to achieve. Because you’re right. I do it myself: I’ll get a dataset and feel like I can make 12,000 pages of insights. Most of them aren’t going to be useful; maybe only 5% are actually going to be useful to the person who’s trying to achieve that actual thing. That’s why it’s just so critical that you understand what these people are trying to achieve and what action they’re going to take from your reports. Because if you don’t understand that, you’re just going to build a whole bunch of crap and send it to them, you know. So that’s really the idea. And so, again, the measures that matter, like I said, come down to those fundamental key things.

1:00:05

For our actual delivery workflow, those three measures are pretty much all you need: just understand throughput, cost per insight, and where your cash flows. You don’t really need to measure anything else from a flow point of view, and you’ll understand how the flow is working. And that’s the good thing: you can kind of stand on the shoulders of giants and know that these are the measures that actually work. So you’re going to be looking for those kinds of opportunities across your organization.

1:00:36

Great. Yeah, Thanks for that.

1:00:38

We’ve had some questions about sharing the checklist, and we encourage you to reach out to us for it. Someone else was asking:

1:00:47

They’re using Power BI but have to do manual data refreshes. What are some of the technical solutions to enable automatic data refresh?

1:00:55

That’s a fairly deep, complex question that we don’t have time to get into here today.

1:00:59

But certainly, you can reach out to us and we’d be happy to talk to you about that.

1:01:05

We are just past the top of the hour, and we try to be respectful of our presenter’s and our attendees’ time, so with that, we’ll go to the last slide.

1:01:17

And personally, I’d like to thank our presenter today, Mr Greg Nash; that was very valuable and insightful. I hope you all enjoyed it, and thank you to our audience for joining us today and taking an hour out of your day.

1:01:28

Hopefully you learned a little bit.

1:01:29

Please reach out to us if we can be of any assistance to you in all things analytics. You can reach us via our website or by e-mail, and if you still actually use your phone as a phone, we have an 888 number there.

1:01:45

And we’d love to hear from you. So, thanks again, Greg. Thank you, and we look forward to seeing you on the next installment of the Senturus Knowledge Series. Thanks, and have a great rest of your day.