Despite significant advances in data architecture and data technologies over the last decades, data modeling continues to play a crucial role in attaining timely, meaningful analytics. If, how, and where data modeling is performed have significant implications to performance, data governance, cost and user productivity.

In the Azure data architecture, data modeling can take place at different points and across various components. It’s important to understand these areas and how to effectively apply modeling best practices to them.

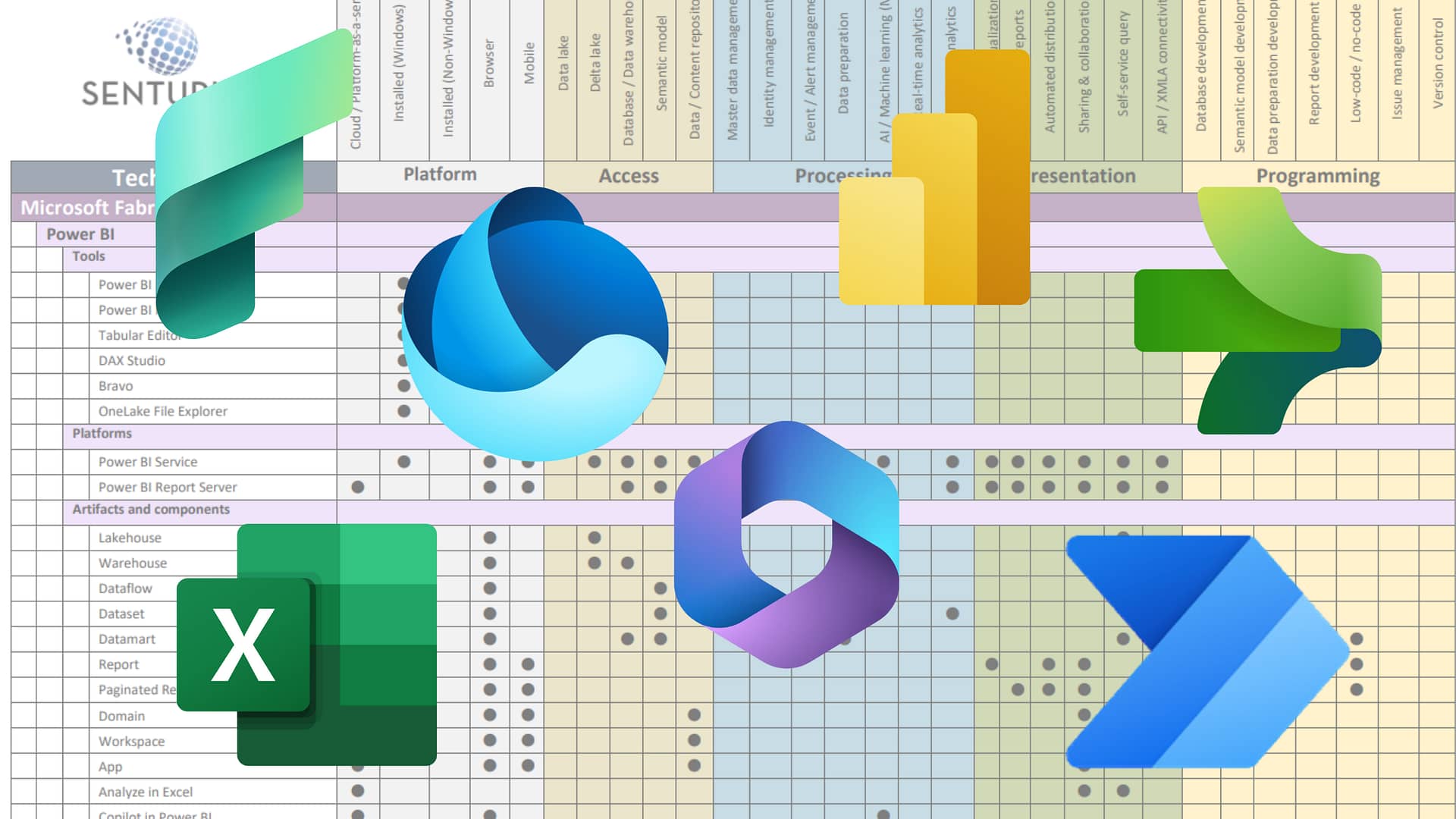

In this on-demand webinar, we explore and connect the different elements within Microsoft’s modern data architecture. We discuss where/if data modeling fits, the tradeoffs, and use cases within each stage of the Azure architecture.

You will learn about

- Different data modeling approaches

- Azure architecture

- Best practices for modeling by stage

- Source

- Data ingestion/data lakes

- Transformation layer

- Data warehouses

- Power BI visualizations

Presenters

Pedro Ining

Principal Business Intelligence Architect

Senturus, Inc.

Pedro is an industry veteran with over 20 years of analytics experience. He has been instrumental in architecting and implementing large-scale analytics systems, data models and data warehousing systems for clients in a variety of industries. Keeping pace with the constantly evolving BI industry, Pedro’s rich skillset includes Cognos, MicroStrategy, Informatica, Tableau and Microsoft Azure.

Steve Nahrup

Senior Microsoft Solutions Architect

Senturus, Inc.

Fluent in the Microsoft stack, Steve has more than a decade of experience overseeing the architecture and design of data warehouse, BI, reporting and dashboard solutions. Curious about new technologies, he is constantly downloading free trials of new platforms and arranging meetings with their product teams to discuss an ongoing relationship where he is granted a license free-of-charge in return for ongoing feedback and thoughts on the current state and future releases.

Read moreMachine transcript

0:11

Hello and welcome everyone to today’s Senturus webinar.

0:15

The topic for today is Demystifying Data Modeling across the Azure Architecture.

0:21

We’ve got a full agenda today.

0:23

We’ll start off with some introductions, discuss what is data modeling, data modeling myths and then where to model OLTP sources, the warehouse Data lakes.

0:34

We’ll touch on a little bit of additional resources and about Senturus and then we’ll get to your Q&A at the end to answer any questions that haven’t been answered in the chat.

0:44

Our presenters today are Pedro Ining.

0:48

He is a Principal Business Intelligence Architect at Senturus.

0:52

Pedro is a veteran data architect and his skills have evolved with industry through multiple iterations of BI platforms including Cognos, MicroStrategy and Tableau.

1:01

We also have Steve Nahrup, our practice Lead for Microsoft Fabric.

1:05

Steve is fluent in the Microsoft stack and has more than a decade of experience overseeing the architecture and design of data warehouses, BI reporting and dashboard solutions.

1:14

And I’m your host, Todd Schuman.

1:16

I have over 21 years of experience with analytics across multiple industries and I’m also Microsoft certified Power BI Data Analyst.

1:24

Before we jump to the content, we want to do a quick poll just to get a feed on today’s audience.

1:29

If you could take a second to answer the following question, we want to know where do you typically do your data modeling?

1:35

You do it in the Data Lake data warehouse, in the BI tool itself.

1:40

Go ahead and spend a couple seconds here just to click and vote on what you typically do.

1:45

This is a multiple choice, so if it’s more than one, feel free to click multiple choices here.

1:50

And I’m going to leave it open just for a couple seconds to get a full audience participation.

1:54

So we’re about 2/3 there, All right.

1:57

That’s pretty consistent.

1:59

We have a kind of a tie between data warehousing and BI tools.

2:02

So let’s go ahead and share the results.

2:07

So you could see it’s about 66% of you are doing a data warehouse.

2:10

The other 66% of you are doing it in the BI tool.

2:13

The data Lake is very small, about 4% there.

2:17

And we’ll kind of touch on these three different places in today’s webinar.

2:20

So thank you so much for your input.

2:25

That said, I’m going to turn it over to Pedro now to go ahead and present the content.

2:32

Thank you.

2:33

Thank you, Todd.

2:34

Make sure you guys can hear me, assuming that’s an OK, That’s a yes.

2:38

Good.

2:38

All right.

2:39

So data modeling is such a wide topic, right?

2:43

So let’s start the webinar a little bit with some definitions.

2:46

And as we get into this whole topic of data modeling for analytics, there’s a lot of different kinds of goals and organizations are trying to do, right.

2:54

Ultimately, our analytical kind of depends on we wanted to do analysis of data from disparate business systems, right.

3:03

So a business is producing a bunch of data and it could be maybe just a simple sales or the business which is producing data for sales and orders.

3:12

But increasingly, a lot of our businesses are getting data from Internet things type of data.

3:19

We’re getting data from Web APIs.

3:23

We get data, of course from our traditional ERP systems like Salesforce and Oracle.

3:29

And we have to make sense of everything, right?

3:31

We our goal is we need to extract some of all of that data and we want to integrate that data so that ultimately we can get a clear, concise and accurate delivery of reports and visualizations, so we can analyze the performance of our business etc and also show it may be in more visual format.

3:49

That’s really kind of our goal from analytics.

3:52

And on one side of benefit too is that we want to try to work towards a self-service environment so that we don’t really become a rapport factory, right.

4:00

We want to be able to empower our end users to be able to do analytics without a lot of roadblocks and things like that.

4:09

So those are some key goals.

4:10

There’s a lot more out there, but those are some of the key things that we want to do.

4:14

When we talk about analytics, data modeling for analytics, you can come up a lot of definitions.

4:20

Here’s one I think is pretty concise.

4:24

It’s a process, right?

4:27

We are, it’s a process used to visualize and understand the structure of information, support those analytic goals, right.

4:36

And the data architecture for analytics are all the things I’m a design and structure perspective of the data environment.

4:46

So that means the source data environment that your organization has, all the different systems, all the business processes that are producing that data, understanding all that, then eventually the technologies that support the data and analysts and reporting.

5:01

That means all your databases, your data warehouses, how you’re ingesting it.

5:04

Of course now we’re going to be talking a little bit more about the data architecture as regards to Azure, the modern data architecture if you will to support that and where the data modeling kind of goes and play and plays in.

5:17

So what is data modeling ultimately?

5:19

Yeah, we have a lot of source data, a lot of textual data coming in.

5:23

We have semi structured data, a lot of JSON file from us maybe because our businesses now are actually subscribing to web-based services, right.

5:34

And a lot of them are just API calls.

5:36

It’s no longer just simply relational structure data where we have the full ERP installed on our on premise systems and we can get the relation databases.

5:47

We still have that, but a lot of those systems are in the cloud too as well, but they still have relational models, right.

5:52

So we have all this data and what are we doing?

5:55

We need to again extract, parse and select the data from all those different sources semi and unstructured.

6:00

And ultimately what we’re trying to do is, we’re trying to get to really simplistically if you think about it, we’re trying to come up to a joinable structure.

6:09

We’re trying to get ultimately to get those measures from the source systems in a structured manner.

6:17

We want to come back to get dimensional elements.

6:21

And if you think of the old, well, not old, but I think it’s still very pertinent is the star schema A dimensional model?

6:28

And you could actually make this more of a logical thought process in a way because there’s a lot of places where you can do the data modelling.

6:35

And maybe we’re not implementing a really physical star scheme in a sense.

6:39

But in actuality, what you’re trying to get to, whether you do it in Power BI or data warehouse, you’re trying to Get the facts and dimensions, you’re trying to get to things you want to analyze and measure and you want to get to that by word, right.

6:51

You want to get back to, we want to analyze sales by customer by a certain time frame.

6:58

And even if you did, what’s new out there is this one big table approach.

7:02

OBT put everything in one big table.

7:04

Ultimately, those dimensions and facts are still kind of embedded in there.

7:08

So in a sense, we are trying to get to this point from a data modeling perspective, it’s not a webinar what a start schema is, but ultimately a business is measuring things, We’re getting facts, we’re getting measurements and we have things we need to group by and analyze.

7:24

So this is one way to kind of look at it.

7:26

Now if you think of the data modeling touch points, if we think of the reference architecture that we have nowadays, not just simply sources, data warehouse, BI layer, right, We have all these sources which have expanded on the left.

7:44

We’ve got log data and media files of course your traditional business applications, we’ve got sensor data, IoT, these things could be streaming in every second, right.

7:53

We got an ingestion layer now which we are doing in the cloud with potentially Azure data Factory data bricks for more advanced processing.

8:06

We have a data lake now there and we’re going to be probably producing other webinars specifically on the different components of the data Lake, right.

8:13

We have Azure Data Lake, we have Azure Data bricks, Delta Lake where we could do some modeling touch points as well.

8:20

The transform layer, you don’t think that there’s a modeling touch point in, but in a sense we are taking that data, we’re getting it ready, we’re staging it, we’re transforming it.

8:29

We’re modeling that data in a sense to get it ready for maybe consumption in the data warehouse.

8:34

Your traditional data warehouse, which now has a lot of different options because we have the traditional Azure SQL database and now we have the Synapse Analytics dedicated pool data warehouse used to be called SQL Data Warehouse for more bigger data sets, more compute power, massively parallel type of data warehouse.

8:55

And we have different ways of modelling in there in that concept too in terms of a star schema, one big table, you know, but you’re still kind of getting back to that dimensional framework.

9:04

And then of course you have the Power BI side of it too where we could do modelling in that side, we can model back to the source, we’ll talk about that.

9:13

But these are just a high level reference architecture.

9:16

Now we have where we have this data architecture in the cloud, all these different places where we can go to OK, some data modelling myths will kind of go through that.

9:28

So where you model, it’s not impact report performance.

9:32

And I mean the reality is you got to get the location right and you got to get your expectations right where you model within that data pipeline because you can do it in several different places now, for example, the data lake.

9:47

So the data lake could be your first point to where you’re getting your data in and maybe you haven’t had the time to pump it through the entire data warehouse architecture.

9:59

Data lakes are really good because they have high, you can put all kinds of storage in there, high data volumes, a mixture of unstructured and mixed structured data.

10:08

You know, one use case there could be you just merge with a brand new company.

10:13

And I need to analyze the customer list.

10:15

I need to analyze their sales.

10:16

They’ve given me these things and files, file structures, right.

10:20

And I need to get to it, OK.

10:23

I could put it in my data lake, I could model it a little bit there, maybe potentially organize it better, cleanse it, maybe slightly, maybe not depending on your use case.

10:31

If I got it today, I need to do it now.

10:33

I can do the modelling there, but maybe the report performance might be impacted because it’s not cleanse, it’s not highly performing, but I can do it.

10:43

So that’s one thing you have to think about.

10:45

Of course, the data warehouse you know best for relational data, that’s your governor’s source of data typically.

10:52

And then your BI system, all these things you have to think about because it’s controversial in the sense that the performance will be impacted depending on where you potentially model.

11:03

And sometimes from a resource perspective, the people that are doing the analysis might not know a lot about modeling efficiency, but they’re going to point right back to a lake house or data where a data lake or files in the BI system.

11:21

So expectations of report performance need to be managed as you pick the area where you want to model. Data Modeling is solely a technical task for IT.

11:35

So what this is trying to say is you know before there could have been a lot of organizations did have a dedicated group within IT typically that did the data modeling.

11:48

I remember the days where we had a data warehouse and before we could add to the data warehouse, we had to consult with the modeling group.

11:55

The modeling group had to sign off on certain things.

11:57

They did the modeling, they had the big ER diagram, they did the DDL, all this kind of stuff.

12:02

But in reality now in the new paradigm, you know, it’s basically a collaboration across both IT business analysts and end users to produce a functional data modeling.

12:16

It’s more collaborative in nature, right.

12:19

You still need IT for the infrastructure, but a lot of the cross functional benefits will be obtained by getting that synergy between technical feasibility and real world applicability, right.

12:31

So the business folks need to get to their data.

12:34

IT can help you to understand where that data can be modeled at, right?

12:40

And this collaborative effort is going to lead to more accurate user centric etc type of benefits, right.

12:49

So those are some of the reasons why it’s controversial.

12:51

You know if we restrict modeling to IT, it’s going to overlook those crucial business insights from that perspective we get these strategic misalignments if it’s totally devoted into IT.

13:05

So that’s something we always think about in terms of some of these myths, another myth you could do all the modeling in Power BI.

13:13

Well the answer is, you know you can, yeah, but do you really want to, right?

13:20

Power BI is very adept at doing modelling tasks.

13:24

Do you have Power Query in there?

13:27

But do you really want to try and build that star schema or maybe not necessarily use the word star schema but your dimensions and facts in Power BI, right.

13:40

There could be a lot of complex data transformations that are needed because ultimately the source systems are very complicated and what you’re doing is probably building a lot of that in the Power BI model and from a downstream perspective, right.

13:59

But a lot of that can happen upstream, right.

14:04

So the point of this slide is that, yeah, you can have really good time to build in terms of doing everything in Power BI, but a lot of the performance maximization can be done if you do a lot of the transformations and ETL upstream and leverage some of the resource up there Now.

14:28

So let’s talk about that.

14:29

We’re going to go through some use cases here.

14:31

We’re going to do a little demo and one area that we just talked about is where to model, maybe do we do this directly on the Power BI layer, the Power BI modelling, like half of you that says I do all the modelling in the BI tool, right.

14:47

So what does that imply?

14:49

That kind of implies the fact that you are going from your source systems, you’re doing like that ETL and ELT process directly into Power BI, right.

15:01

So some of the pros for that approach are things like, OK, yeah, I have a speed to report.

15:07

I can actually bring all my data and not worry about the stuff in the middle.

15:14

OK, I don’t have to worry about the humans, I don’t have to worry about the services.

15:18

I have full control.

15:19

I don’t have that intermediate step where I have to wait for a table to be created in dated warehouse or something to land on a lake.

15:27

I can go right to maybe to the files or the business applications that are out there.

15:32

It’s flexible, it’s user friendly, and it’s good in the sense that it could be an initial prototyping step, provincial, upstream changes, right?

15:41

So if you think about it, I have to add a column, but it’s not in the data warehouse.

15:45

But I need to do this calculation.

15:47

I need to get it over to the Power BI, I need to serve it up in a report.

15:51

I’ve done all the transformation and Power Query, etc.

15:55

I’ve got the steps I need to create that calculation.

15:58

Well, that could be a basis for eventually putting it upstream and having it part of your data lake or your data warehouse, etc, right?

16:05

But the con is, again, your source systems are very complex.

16:09

You might be spending more time trying to build dimensions and facts from your source systems as you bring it into Power BI.

16:18

OK, you’re going to be doing a lot of DAX.

16:21

You’re going to be doing a lot of complicated DAX in Power Query.

16:24

Model duplication is another kind.

16:26

So I’ve created my great Power BI model.

16:29

I had everything in there.

16:31

The guy in the other department is doing the same thing against the same source system.

16:35

He’s redoing the work.

16:37

Maybe he’s doing it slightly different.

16:40

Maybe he actually did it wrong and you guys go to the same meeting you have the you’re showing a report and there’s slightly different answers, right?

16:47

So that single source of truth is not there when you have multiple Power BI models, maybe multiple Power BI data sets out in the organization and of course maintainability, right.

16:58

So if you’re creating a very complex Power BI model, which is really based on a lot of complicated DAX and Power Query, are you going to remember that six months from now what you did, right?

17:10

Or if somebody leaves your organization and somebody else has to maintain it and they open up that Power BI model and sees all these complex transformations.

17:18

So those are some of the things that you have to think about when you do model directly in the Power BI layer.

17:25

But now I’m going to turn it over to Steve Nahrup.

17:27

He’s got to show a little demo.

17:28

Well, that kind of looks like one of the things he has to do if he has to do the modeling potentially directly in the PI and the BI layer.

17:36

So Steve out there.

17:38

Hello.

17:39

Yeah.

17:39

OK.

17:39

All right.

17:40

There’s Steve.

17:41

Welcome aboard.

17:43

Thanks, Pedro.

17:44

OK, here we go.

17:46

So the most common, one of these really complex sources that you know Pedro did a wonderful job walking through was taking a transactional type database such as an OLTP, ERP system.

18:03

And you can see we’ve somewhat simplified it based on color code and the objective would be to create dimension and measure tables based on you know, the color.

18:16

So the reason that you’re doing so, we’re just going to walk through quickly how you do this, like Pedro was saying, this is you really do not want to, you know, redo all of these complex transformation steps directly within Power BI.

18:36

It becomes very convoluted, complicated and it’s very hard to replicate across departments.

18:45

So what we recommend is instead of doing what you know doing here, highlighting all of these sales tables and then basically grouping it and creating one large table for the dimension you would like.

19:05

I was just saying you would just create a single fact table and we recommend doing it.

19:13

Either you can do it in Power BI like Pedro was saying in terms of you know, yes it gives the best business user flexibility and it just it doesn’t, then allow you to maintain the ability to scale across like department, division etc.

19:34

So instead of doing that, we recommend either putting you know, if your business user getting in touch with an admin, or you know the data steward of a specific data source that’s familiar with SQL, and testing them to create all of the necessary joins in a convoluted, complex data source like this.

20:00

And they can provide you with a single query.

20:04

Sometimes they’re really long and very complicated and they can actually end up looking like this.

20:09

So each one of these lines, when you see a joint, those are.

20:17

That would be the equivalent of adding two or three steps just to.

20:21

Merge tables just to pull in their mention for a specific column.

20:27

Very convoluted, very complex and way too much so we recommend pushing it up further upstream.

20:34

Either, you know, in the data flow, in the data like in the service so that other people can leverage the work that you did or pushing it even further upstream and creating a view in the database or a materialized table using a stored procedure or something along those lines.

21:00

Thank you, Steve.

21:01

So just to add on to that.

21:03

So what he’s trying to show here too is the fact that this is typically like probably like some sort of Oracle ERP application and they’re really just trying to come up with a fact table for PO facts, right.

21:13

And as you can see you’ve got about 20 joins in there, inner, left, right, outer joins just to get maybe the business requirements here and if you had to do that in, Power BI, that’s a lot of work, right?

21:25

I mean from Power Query perspective, even creating this particular view up on the source system and then having Power BI hit it embeds all this logic in one particular view and imagine changing this over time.

21:38

So this is really the point of Power BI going against the source system and then maybe even leveraging something else because you need to have Power BI more optimized.

21:49

The other area where we want to talk about now modelling Power BI against the data warehouse layer, right.

21:59

So in this particular example, we did build a warehouse, it could be a Synapse data warehouse because we have a very large volumes, it could be like we said in Azure SQL database, right.

22:08

We’re doing, they’re doing the ETL there and then you’re importing this data into Power BI.

22:12

So what are the benefits here?

22:14

Obviously this is the classic approach.

22:16

We all know about it, but it’s easy to use, understand that particular table count is much smaller.

22:23

So if you had about 50 tables from an Oracle ERP system that we just showed you how to join, after you’ve done all the ETL work into the warehouse etc, you’re down to maybe a five table count, right, faster queries.

22:37

And the key thing of it is, it’s governed data, right?

22:41

This has been done, it’s very structured, it’s been queued, it’s been signed off, right.

22:46

Obviously the cons are that it has more.

22:50

It has longer development time, right?

22:52

It takes a while to build to do this.

22:55

If you had to add 1 column, the changes also require more time.

23:00

And of course there’s an infrastructure expensiveness.

23:02

From a cloud perspective, there’s compute you have to work with to do this.

23:07

OK, so now Steve’s going to actually talk through this and do this.

23:10

Same thing with Power BI.

23:13

So, Steve, go ahead.

23:14

So Yep, so this is your standard star schema pulled from a SQL database.

23:20

This end result, what you see here is very similar, if not exactly what you want the model within Power BI to look like.

23:29

And that’s what Pedro was talking about earlier.

23:34

So the dimensional tables or filter tables are on the outside the at the point of the stars and you have your fact table directly in the center.

23:45

So here we’re just going to do what we did in the last one except we’re going to connect to it and we’re going to pull in all of the tables, the dimensions and the fact and luckily you know on in this database you have pre-existing primary keys and foreign keys within the database itself.

24:13

So we’ll see in a second why that’s beneficial.

24:17

But yeah, so normally you would have to bring in these tables that wouldn’t be connected or have any relationships whatsoever.

24:28

But even when you have to remember, even when tables do have like existing relationships, more times than not they’re somewhat accurate.

24:42

But you want to double check that they have a date needs you know needs to connect to all the dates in the table and just making sure that it’s one to many, not many to many.

24:56

You want to avoid that.

24:58

And so Yep, you can go ahead and OK, great.

25:06

So as you can see, right, the table count went down.

25:09

It’s a lot easier to model.

25:10

It’s easier for and used to use this particular approach and it puts all the lot of the business logic back up onto the data warehouse.

25:20

OK, we’re pretty familiar with that.

25:22

So the other one I’m going to talk about now which is kind of interesting to me is now we’ve got, it’s not you right, it’s been out there for a while, but maybe for a lot of organizations and we saw that from the poll only like 4% of you are actually in a sense modelling on the top of the Data Lake layer, right.

25:41

So with Azure and others other cloud vendors, right, we can store data on the Data Lake.

25:48

I just got Data Lake Gen.

25:49

2 here, ADLS if you want to call it from Azure.

25:53

We can point Power BI now to the Data Lake.

25:58

And for those of you who have been kind of kicking the tires on Fabric, that is their whole foundation piece in which now they’ve renamed 1 Lake, they’ve added things on top of that.

26:09

Parquet Files really pretty much make this the point here, right?

26:14

So here again the pros right?

26:18

You’re bypassing the ETL of a data warehouse scheme, right?

26:23

Fast development.

26:24

Since I’m bypassing start schemas and data warehouse, I don’t have to wait for the data engineers to get the data out of the data lake and put in the data warehouse and then have Power BI go against it.

26:38

Fast development in a sense, no compute resources, they’re very minimal, right?

26:42

Because what you’re doing, you’re paying for storage on a lake, right?

26:45

You’re having Power BI go against that this, the computer resources are nowhere near something like an Azure SQL pool, dedicated pool, right.

26:53

And like I said, and this is this whole concept now is even more prevalent with fabric, which we’ve got a bunch of webinars.

26:59

We’ve got a webinar coming down, our fabric coming out.

27:01

And this is what they’re kind of pushing in terms of a unified approach.

27:06

Some of the cons, well, the data, yeah, it may not be cleansed, right.

27:09

And depending on how you organize that data lake you, it becomes a data swap.

27:14

But maybe you have a very, organized data lake.

27:18

Maybe they’ve just done enough cleansing.

27:21

It hasn’t made the data warehouse yet.

27:22

But I’ve actually, I haven’t created maybe the files for fax and I’ve got my dimensions etc maybe but you have the risk of maybe going against not cleanse data, but maybe you have that usage scenario.

27:39

I need to look at it again.

27:40

Back to my scenario where you’ve just merged with a brand new company.

27:43

You need to look at that data.

27:44

I need to look at it now.

27:47

Depending on the file structure, you still could have potentially heavy modeling in Power BI.

27:51

Yeah, maybe the files are not just simply a customer dimension and a fact file.

27:56

Maybe everything’s all put together.

27:58

Maybe there’s things you have to cleanse and you have to transform.

28:01

You have to take it and have to create complicated DAX calculations.

28:05

I have to do a lot of stuff, empower our query to get it to look right.

28:09

And so those are some of the things.

28:11

But I think the key thing here is really I have data in the day lake and I need to analyse it.

28:16

I’ve got some sensor IoT data.

28:18

I can do it now and I can do it pretty efficiently now with the new Park a formats that are available in Data Lake.

28:25

So what I wanted to show here is just a demo and this is not a fabric demo, but basically it’s trying to show you how that kind of works now through the pieces of the architecture.

28:37

This is Azure Data Lake Data Explorer and what I’ve got here our storage accounts and I do have ADLS Gen.

28:47

2 account and I’ve kind of what I’ve done here is a scenario or use case to where I’m actually I’m going to a folder or a block container called star schemas and somebody that’s out there actually put some dimensions and facts for you.

29:00

I just want to show you the mechanics of how Power BI, it kind of does this, right?

29:06

So I’m going to push the little play button here.

29:07

And yeah, we’re talking about Explorer.

29:09

We’ve got a container, so let’s start schema.

29:11

We’ve got folders for dimensions and fax and maybe they are kind of cleanse, right?

29:16

I want to bring in my fax sales.

29:18

I’m going to go to here and I’m going to copy this endpoint.

29:20

I’m going to go back to my Power BI application.

29:23

I’m going to press get data.

29:25

I’m going to go ahead and look for the Azure ADLS Gen.

29:29

2 Data Lake Storage Gen.

29:32

2.

29:32

I’m going to connect to it.

29:33

I’m going to go ahead and paste that URL in right.

29:35

So if you I see 2% of you have not done this or 2% have only done this.

29:40

This is one way of doing it.

29:42

I’m going to.

29:42

I can see that fax sales CSV here now and it comes up with this query.

29:48

One additional step you have to do is instantiate that table.

29:52

It shows you that it’s pointing to the Data Lake storage account with a URL.

29:56

If I click on that little binary hyperlink, it actually loads the table and I’m just going to rename this guy Fax Sales, OK, And I’m going to go ahead and close and apply that Power Query transformation.

30:11

It loads it and now I have a new table here called Fax Sales with data inside it with all the different fields that were in that CSV.

30:19

OK, now I’m going to go back to my data explorer and I’m going to go in the Dimensions folder.

30:25

I see my files there and this way I’m going to go ahead and copy the URLDFS endpoint to the dimensions folder directly.

30:34

And the difference is it’s going to bring all those files in.

30:36

I’m going to show you how that works.

30:38

I’m going to connect and I’m going to put that URL in just before and I’m going to go in and I can see those files there.

30:48

Customer, date, product, I see those files there.

30:51

I’m going to say load and again I’m presented with the query.

30:57

The one little difference I’m going to say here is that I’m going to actually instantiate these actual separate queries or separate tables.

31:04

The way I do that is I right click on that, I say add as New query and I’m going to rename that to and load it.

31:10

I’m going to rename that to Dim Customer.

31:16

OK, rename the Dim Customer.

31:17

I’m going to go back to that reference query one and then do the same thing for each one of the other dimensional tables, right.

31:25

I’m going to add new query that’s my date dimension.

31:28

Say for example, load that, there’s all my dates, OK.

31:33

And that’s one of the common things that probably is going to be out there is a date dimension.

31:37

How much more common across an organization can you get other than a date dimension, the product dimension?

31:43

OK, add as new query and reload that, rename that.

31:49

OK, call that Dim Product and I’m going to go ahead close and apply the Power Query editor.

31:57

I’m getting rid of that query one because I don’t need that anymore.

31:59

That was more of a way to get these guys loaded, close and apply, OK, loading the data into the my Power BI model.

32:08

OK.

32:09

And I’m just going to organize these things.

32:12

What do we come back with now?

32:13

We’re pretty much looking like a relational data warehouse schema with customer, Product, date, fax, Sales.

32:24

Power BI has recognized that the keys are matching on both table and dimensions, so it kind of infers a join there date dimension.

32:31

I’m going to have to join that to that.

32:34

I’m going to do additional clean up on the Power BI side for renaming metadata, semantic layers, maybe add some calculations.

32:40

But the point of it is this demonstration is trying to show that I bypassed data warehouse.

32:45

I went to the data lake.

32:47

In reality, I’m pointing to CSV files.

32:50

I would have probably point to parquet files because the benefit of parquet files is they’re very highly compressed, they’re high performance, they have metadata there and data typing, unlike CSV files which you know.

33:03

If you load the CSV files you can get a lot of misconstrued data or errors.

33:08

But this was just from the point of an example.

33:11

If you point to parquet files, you’d have the same thing.

33:14

What you’ve got there basically is again with dimensions and facts from a data lake.

33:20

And so a little bit of Power BI modelling inside here.

33:24

But there was some modelling in a sense happening at the data lake layer where somebody actually organized it and created the facts, created the dimensions.

33:33

And this could be that use case where these are some files that I got from my new merged company, right?

33:38

And I need to analyze it.

33:39

So somebody went there and gave me the new customer list, gave me the new product list, gave me the sales from that company, put it together and I could do that.

33:47

OK, so let’s go ahead and kind of wrap this up a little bit here.

33:53

I’m bringing this back again, the reference architecture, because what we tried to show you here was all the data modelling touch points, right?

34:03

And in the data lake, which is a complete webinar on its own.

34:08

We have a lot of options to make that a really nice data modelling touch point which I tried to show you depending on how you organize and there’s a lot of ways out there in the industry.

34:18

They’re kind of referencing this lake, Delta Lake, Azure Delta Lake, Data bricks architecture is, if you curate it enough, you create all these different zones in your lake house or your delta Lake.

34:30

They’ve used concepts like bronze, silver and gold, which bronze is raw, Silver is cleanse, gold is curated right and you’re hidden.

34:38

Maybe the gold structure, you can use this as your an initial point for data modeling to bring it into Power BI right?

34:45

Like the bottom arrow is showing Power BI going against that gold structure right.

34:50

Then you have your other traditional places like you know your sit ups analytics, your SQL database.

34:56

This is just bringing home the different points.

34:58

So really what we’re trying to get to here is this is Roche’s Maxim that you’ve seen a lot of stuff that in social media, this guy’s name is Matthew Roche, he’s a Microsoft Program Manager with the Power BI Advisory Board.

35:14

He came up with this very nice maxim.

35:16

You know, it’s basically saying data should be transformed as far upstream as possible and as far downstream as necessary.

35:25

Upstream meaning it’s closer to the source of the data, Downstream meaning is more closer to the consumption of the data, right.

35:33

So although there are all these modelling points that we can now do in a modern data architecture, it’s always best practice to create all those calculations, those transformation points as far upstream, farther you do it even if you’re hitting the data lake, if you’ve actually done some transformation and you within the data lake you create a gold area where that stuff is ready for consumption.

35:57

It’s not ready to go into the data warehouse yet.

35:59

It’s ready for consumption.

36:01

If you do that there, the heavy work on the Power BI side is less and the possibility of errors with calculations is less.

36:12

The maintainability is better, right?

36:17

And as you’re leveraging the data architecture as much as possible, even though Power BI itself can do all those things, OK.

36:26

So I just wanted to wrap everything with that.

36:28

Up with that and next slide here, I’m going to turn it over to Todd, see where we are.

36:35

All right, thanks, Pedro.

36:36

Thanks Steve.

36:36

Lots of great information there for everyone still out there.

36:41

If you have any questions or need guidance on how to data model in your environment, please reach out to us at [email protected].

36:50

I’m going to touch on a couple additional resources.

36:55

Senturus provides hundreds of free resources on our website in our Knowledge Center that are comparisons.

37:01

We’ve been committed to sharing our BI expertise for over a decade.

37:04

So just go to Senturus.com/resources and take advantage of all this free information.

37:11

We’ve got some upcoming events that you might be interested in.

37:14

We’ve got a webinar on Accelerators for Cognos to Power BI Migration.

37:19

That’s on Thursday, November 30th, 11:00 AM Pacific, 2:00 PM Eastern.

37:24

You also have a new exciting webinar on December 7th, Microsoft Fabric Architecture or Marketecture.

37:31

We’ll talk about is the hype real?

37:33

We’ll touch on things like Data Factory, Data Warehousing, Data Sciences, One Leg, Power BI, a lot of good information in that webinar.

37:40

We also have an in person workshop on Microsoft Fabric TBD on the date and location for that.

37:45

So again, make sure you’re signed up for our emails and check out senturus.com/events.

37:50

A little bit more about Senturus.

37:55

We provide a full spectrum of analytic services and enablement and proprietary software to accelerate bimodal BI and migrations.

38:03

We particularly shine in hybrid BI environments.

38:06

So no matter how big or small your project, Senturus provides flexibility and knowledge to get the job done right.

38:14

We also have a long history of success.

38:16

We’ve been focused exclusively on business analytics for over 20 years now and our team is large enough to meet all of your business analytic needs, yet small enough to provide personal attention.

38:29

I am going to open it up now to some Q&A.

38:31

We didn’t get too many questions during the webinar, so if you do have questions at this point, please put them in.

38:37

We have a couple I can start off with here.

38:40

I don’t know if Pedro or Steve you want to feel these.

38:41

Maybe this first one is good for you, Pedro, since you’re also a Cognos guy.

38:45

Question is how does Senturus recommend we build layered models thinking Cognos Framework Manager model where we have a Power BI perspective.

38:57

Interesting.

38:59

I’m actually on a project right now where they are definitely using Cognos, but they’re leveraging the Azure architecture, right.

39:08

So and they actually created a Synapse data warehouse.

39:10

I don’t know why because it’s a pretty big footprint.

39:16

But they’re using that for data that might they might need to bring a large volume of data in.

39:23

But they’re using Cognos Analytics, and what I’ve seen done now is actually using more of the data module concepts within Cognos, right?

39:34

Framework Manager is great still being used, but for newer implementations, especially against like an Azure framework, using data modules I think gives you the best flexibility and I’ve actually just quickly spun it up and use it against that dedicated pool sit ups analytics and created dashboards with Cognos analytics against it real time without actually creating.

40:01

If you’re familiar with Cognos data sets similar to Power BI data sets, we can actually take it out and create parquet files within Cognos.

40:08

But the performance I thought was really nice.

40:12

We’re using a data module, so I think within the Cognos framework, especially for new implementations like that.

40:22

Cognos has mimicked a lot of the features set of Power BI and that they now you know for a while now they’ve created data sets, data modules, they don’t have the transformation capabilities like Power Query that BI does, right?

40:36

But I would start with using data modules against an Azure environment, whether it’s actually SQL Data Warehouse or cloud based databases, and I think you’ll find a pretty good performance there.

40:51

If you don’t, then using data module as a source, you can create Cognos data sets and instantiate those parquet files within the Cognos system itself.

41:01

So it’s kind of funny because in a way you’re kind of creating a Cognos lake, right?

41:08

Those are parquet files on Cognos, but you’re using the SQL Data Warehouse or dedicated Synapse SQL pool up there on Azure because they’ve created that architecture and you’re basically extracting parquet files and putting in Cognos.

41:25

So, long winded answer, but there’s a lot of ways to do that, a lot of interesting ways and new techniques really make Cognos shine even on top of a Microsoft Azure architecture.

41:37

So hope that answers your question.

41:40

Yeah.

41:40

And along those same lines, is there any sort of, you know, if you’re familiar with Framework Manager, different layers, like a database layer, transformation layer, business layers, Is there any equivalent that you recommend in Power BI similar to that?

41:57

Power BI Yeah, that’s a total different paradigm.

42:00

And what we’re referring to is within a Framework Manager, you typically set up a database layer and then a presentation layer.

42:08

I’ll let Steve answer a little bit of that.

42:09

But to Steve, you’re talking about how you basically you bring your tables in and then you create a semantic layer on top of that.

42:17

What’s the equivalent of that?

42:19

Just a star schema approach where pretty much bring it in and you create your relationships directly within the model view.

42:28

So not a ton of transformation in each table or in Power Query, but more so just it’s kind of in that end state star schema that you want to get to eventually.

42:42

Yeah.

42:42

So basically you’re you have to bring a lot of raw tables in.

42:45

But then maybe using Power Query you’re creating merged query subjects.

42:49

I’m trying to relate it back to Cognos Framework Manager, right.

42:52

You’re creating other views on top of what’s in there and re renaming the raw table columns somewhere in that in the Power BI framework, right.

43:00

We don’t have any other open questions right now.

43:03

Again, if we will prefer a couple of chat, Todd.

43:08

In the chat.

43:09

Yeah, there’s one in the chat I think.

43:11

Let me just double check.

43:15

It was from Duraid and the question was what’s the difference between getting Dim in fact from a container and from getting it directly from the Azure, getting it directly from the Azure Data Lake.

43:36

He makes the point that eventually you might be doing the modeling part at Power BI level.

43:41

Anyways, that was just really try to illustrate.

43:45

Maybe we didn’t talk about this.

43:48

We’ll probably have a webinar on this, how you structure a data lake.

43:52

And that was kind of an example of a very curated gold section where somebody has actually done that for you.

44:00

But maybe a lot of times you’re going after a raw file, one big file which has all the measures, your customer information, your product information, all there.

44:11

So you won’t have that luxury, right?

44:14

So if you’re doing that, you will have to do more modelling.

44:18

And within Power BI, because basically what is a customer dimension off one huge file, you’re doing a select distinct customer attributes from that and coming up with a customer.

44:27

How are you going to get the products out of a one huge file?

44:30

Well, you have to do a select DISTINCT and model it that way, right?

44:34

So you’re going to have to do a lot more work.

44:37

So I think we’re going to have a more detailed discussion on properly setting up a data leg structure for consumption.

44:44

And it’s usually divided into basically the raw area, maybe a bronze area where you have done some cleansing and a gold area where maybe it looks like that.

44:53

I have a folder where I have set up all the facts and dimensions and customers.

44:58

Because one thing from a even like a customer list, you might have in the raw area, customer extractions from five different source systems, all the different ways of representing customer.

45:13

And then you as a Power BI developer will have to bring all those files in and do some Power Query transformations just to cleanse it up because you’re going against the raw area.

45:22

But if you go against the gold area, maybe somebody has done an integration for you, gotten rid of duplicated customers.

45:30

Simply take taking lowercase to uppercase, fixing the zip codes, all that stuff you have to figure you figure out and do on a customer.

45:37

Just a customer dimension itself might be already in the gold area.

45:40

So that was basically the whole point of that.

45:44

Here’s another question.

45:45

So the point of using containers is to clean and simplify the views to be used in Power BI level, correct.

45:52

And yeah, going back to my last answer, yes, in a sense, it’s organization, right?

45:58

So and I think if you think of Data Lake as really just a bunch of files and Microsoft says, well, OneDrive has become one lake, right.

46:05

It’s really a way to organize your files, containers, think of them as just simply directory structures or folders, right.

46:12

Within the containers you have other folders.

46:14

So if you’ve got a container called raw, right?

46:18

That’s just really it’s your dumping zone, your every day you’re going out to a Web API call and you’re dumping all this data in the raw folder, hasn’t been even touched yet, hasn’t even been luck, right?

46:27

Another container will be called structured because you’ve taken that around and you’ve structured out for consumption.

46:32

But that’s where you have to leverage getting the best structure format for your lake for consumption by Power BI, because if you don’t, then you have more work at Power BI because maybe you’re going against the raw area.

46:50

All right.

46:51

Just a quick question on here about when we will be discussing Microsoft fabric.

46:54

There is that upcoming webinar on Thursday, December 7th.

46:57

Again, just go to our senturus.com/events and you can register for that.

47:03

All right.

47:03

You wrapping it up, Todd?

47:05

Yeah, I think that’s it.

47:06

So again, thanks everyone for joining.

47:08

We will post this webinar and the questions to be answered up on the senturus.com website.

47:15

So feel free to check back.

47:17

And thanks everyone for attending.

47:20

Thank you.

47:20

All right.