Is Microsoft Fabric the significant architectural advancement that Microsoft claims it is… or is it just the same old stuff repackaged? We’ve been wondering ourselves. Our analytics experts set out to sleuth the truth and delve into Fabric. Their mission: to understand the real-world implications and advantages of Fabric and report back.

If you are an IT, cloud computing or digital infrastructure professional and are wondering what Fabric really gives you, this on-demand webinar is for you. You will learn what distinguishes Fabric from conventional cloud architectures. Or not.

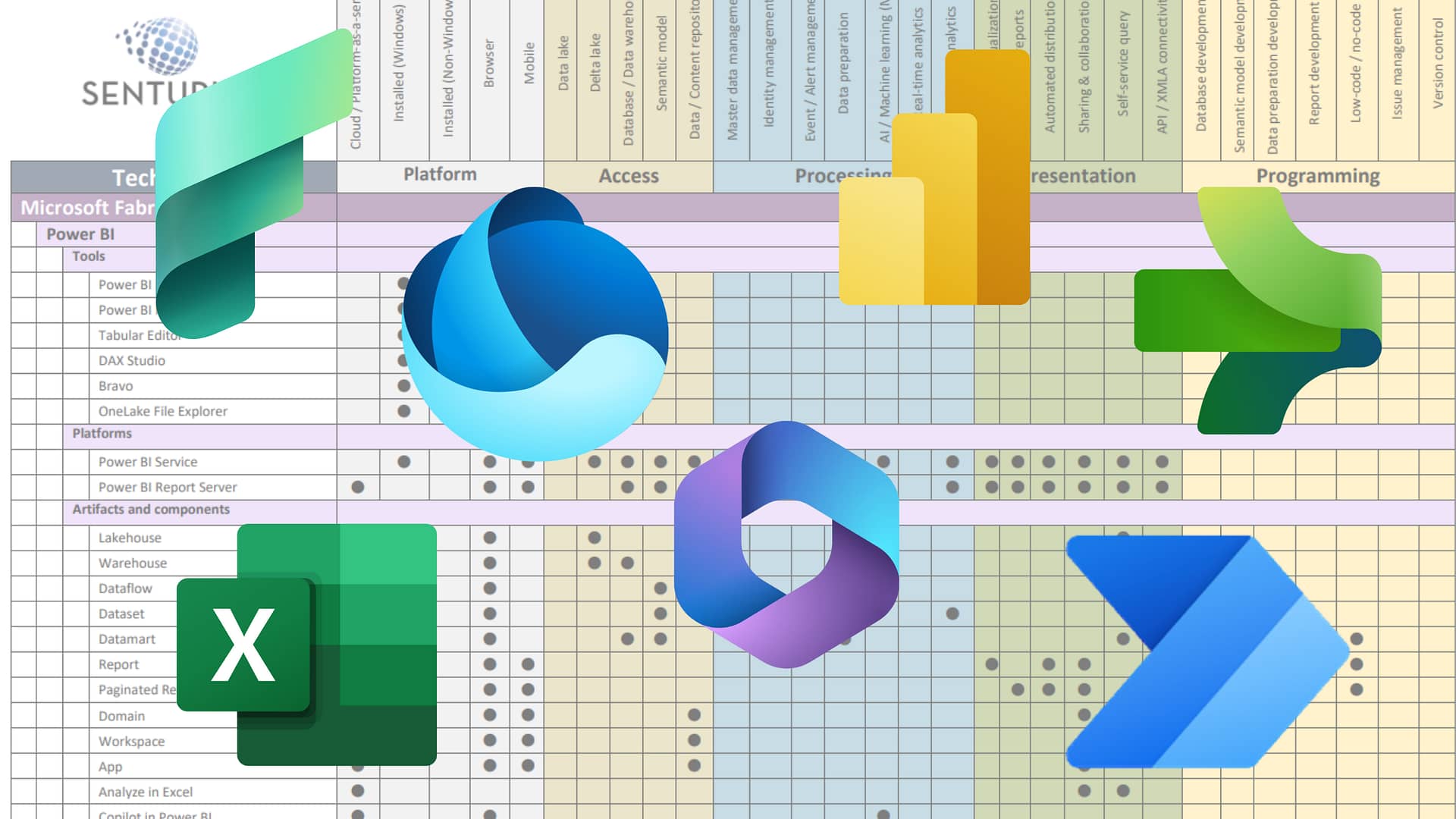

We explore

- Data Factory

- Synapse Data Warehousing

- Synapse Data Science

- Synapse Data Engineering

- OneLake

- Power BI

- and more…

Presenter

Joey Manning

Microsoft Solution Architect

Senturus, Inc.

Machine transcript

0:12

Hello everyone and welcome to today’s Senturus webinar on Microsoft Fabric Architecture or Marketecture.

0:20

Thanks as always for joining us.

0:22

Great to have you here.

0:24

All right, before we get started, a little bit about our agenda for today, we’ll kick off with some introductions, talk a bit about the road to Microsoft Fabric, its components and some reference architectures for the Fabric platform.

0:38

We’ll dive right into the question: is this marketecture or architecture?

0:42

And we’ll wrap up with a bit of info about Senturus Plus.

0:46

As I said, we’ll do some Q&A at the end to answer your questions.

0:51

Some quick intros here before we get started.

0:54

Number one, we originally had Guy Wilani and I as our presenter.

0:57

Today we’re going to actually have Joey Manning, who’s a Senior BI Solutions Architect here at Senturus, as our main presenter.

1:04

But for those of you who came here to pepper Guy with questions, he is on the session and will be here for Q&A.

1:10

So no worries there.

1:12

But Joey’s going to lead the presentation today.

1:15

Joey has over a decade of experience architecting modern BI solutions, concentrating on Power BI, Looker and Tableau and now Microsoft Fabric.

1:24

He has a deep expertise in the Microsoft stack, which is why we have him here today for marketecture vs architecture.

1:31

Joey’s a self-described data geek and problem solver who seeks to foster innovation and streamline operations for clients.

1:38

He also recently became a Certified FinOps Practitioner, adding cloud governance analytics to his already impressive skill set.

1:46

So, Joey, welcome and thanks for being here today.

1:51

As for me, I’m Steve Reed Pittman.

1:53

You’ve probably heard me here before.

1:54

I’m Director of Enterprise Architecture and Engineering here at Senturus.

1:58

I’m here for the intros and outros today, but Joey is going to do the bulk of the presentation.

2:03

And before I turn things over to Joey, let’s just do a couple of quick polls.

2:09

The first poll is what is your company doing about Microsoft Fabric?

2:14

And you know Microsoft Fabric is relatively new, it just recently moved into general availability.

2:19

So the question is are you watching and waiting?

2:22

Are you actively researching Fabric?

2:24

Are you in development with Fabric?

2:26

Are you really cutting edge and have some Fabric stuff in production?

2:30

And finally, are you just wondering what is this thing?

2:34

So I’m going to go ahead and wrap up the poll, share these results out.

2:40

You can all see.

2:40

So again, a little under half of you were in watch-and-wait mode.

2:44

A fair amount of you were actively researching it.

2:47

So you’ve come to the right place, and a fair number of you just want to know: what is Fabric?

2:52

So we’ll get into some of that.

2:55

I’m going to go ahead and stop sharing this poll.

2:56

Let’s just do one more quick poll before I turn things over to Joey.

3:00

The second poll here is: what are your biggest concerns about Fabric?

3:04

So assuming you know a little bit about what Fabric entails, are you mostly concerned about administration, cost, governance or security?

3:16

Let me go ahead and end this poll and I’ll share those results out for everybody.

3:23

So again, it’s pretty well divided across all of these categories.

3:27

A few more of you concerned about cost vs the other things.

3:31

But the good news is we’re going to talk about all four of these things today.

3:36

All right, well, with that, I think I’ve done enough talking.

3:39

So I’m going to stop sharing that poll and I’m going to turn things over to Joey Manning.

3:47

Thanks, Steve.

3:48

So as Steve said, I’m happy to be here.

3:51

Thanks for joining us today and let’s jump right in.

3:56

So first and foremost, here’s what we’ll touch on.

4:00

At Senturus, there are a few problems that we sometimes see with traditional architecture, right?

4:05

That is, architecture that isn’t utilizing Microsoft Fabric. One of the things we see is a lack of data transparency across the business. There can often be several black boxes, and finance, analytics and all these different teams might not know where their source data is coming from, or why a given transformation is happening, etc.

4:29

And leading into the next two points: IT is only one team, right?

4:36

And sometimes IT they have a lot of things to do and it can really be a bottleneck for a business at times because they’re in charge of so many moving parts.

4:47

And then again, in conjunction with that, a lot of times there are very specific skill sets that are required for the traditional architecture at this time.

4:58

So you need your SQL experts, you need your PySpark experts and things like that.

5:04

And as we’ll see a little bit later, Microsoft Fabric can alleviate the need for those very specific skill sets.

5:13

Now another thing with traditional architecture is there can be a very long time to value when it comes to your data, right?

5:22

Because what do we want out of our data?

5:24

We want insights, we want actionable things to be able to make data-driven business decisions.

5:31

And with IT being only a singular team and needing these specialized skill sets, it can take quite a bit of time to identify sources, ingest sources, transform sources and finally get it to your report builders and your analysts and things like that.

5:50

So moving on to kind of in conjunction with data transparency, since there’s a lack of transparency across the business, there’s opportunity for there to be people playing with data and then having duplicate efforts, right?

6:07

There could be two different teams dealing with the same exact data, and all of a sudden there’s report development and maybe KPI development that are intended to support the same goals, but things are calculated and developed differently. And inconsistent reporting between groups, the next bullet, can certainly cause some problems, right?

6:30

And finally, governance and security can be very complex with traditional architecture. In the event that your data is located in several different places, you’re using several different applications, and you have to deal with who has access to this and that application and what the security level is for each user. So governance and security can be very complex across the ecosystem that is traditional architecture.

7:00

So after talking about some of the problems we see from some traditional architecture, let’s go ahead and jump into a brief history of Microsoft and why we’ve landed in Fabric.

7:13

And the reason I want to talk about this is to help set the stage of what we’re talking about and why Microsoft has landed at Fabric.

7:23

So way back in the 80s, Microsoft entered the analytics field with Excel and eventually SQL Server.

7:32

And then fast forwarding a little bit, we finally get the release of Power BI and Data Factory and then some other tools that support the goal of getting insights out of your data, right.

7:47

But at that point in time, although they all supported the common goal, they were all separate. The applications were in different locations, and you needed specialized skill sets for some of these moving pieces.

8:03

So moving forward a little bit more, now we have Azure Synapse Analytics and this was really in our opinion, Microsoft’s first go at consolidating all of the moving parts to support reporting and getting insights out of your data.

8:23

This was their first take at trying to consolidate the landscape, but they were still separate, right?

8:32

They were still separate applications.

8:33

You still needed very specialized skill sets for some of these instances and some of these moving parts.

8:40

And now we’ve landed at Microsoft Fabric, right.

8:44

And whereas Azure Synapse Analytics allowed us to build a platform to really expand and build the ecosystem that is our architecture,

8:56

Fabric aims to turn it into more of a software as a service, right?

9:03

So many of the things that we are already familiar with, data factory, warehousing, lake houses, things like that, it’s all under one roof now.

9:14

It is under one place, one browser experience and it really is a consolidated, tight, under one roof package.

9:24

So Microsoft’s goal is to consolidate all of the moving parts so we get insights out of our data as quickly as possible.

9:33

So now that we’ve talked about a brief history to support setting the stage, let’s talk about what we enjoy and what we’re excited about at Senturus when it comes to Microsoft Fabric.

9:43

So as was already mentioned on the last slide, it’s a software as a service and when you implement different capabilities like reports and semantic models and pipelines, you provision it once and everything’s managed by Microsoft.

9:59

You don’t have to create storage accounts or SQL databases or set compute sizes.

10:06

All of that is managed by Microsoft, which is a really awesome feature that alleviates a lot of legwork.

10:14

And then moving on beyond that, something that’s really interesting and exciting is that it enables citizen developers.

10:24

It empowers them to take on several of the roles that traditionally exist with either low code or often times no code experiences.

10:36

So your average user can all of a sudden be enabled to do the end to end process without the need for very specialized skill sets.

10:48

And then moving on to the next two points talking about the consolidation of things, the browser based end to end data analytics.

10:57

Right.

10:57

Again, I talked about this on the last slide about history: when we got to the end, Fabric consolidated all of those moving parts into one browser experience, one roof, one software, and what that also works towards is consolidating our data estate, right?

11:16

So if we have data in several different places, it can be a lot to keep track of.

11:24

And one particular team or user may need access to several different sources and several different applications.

11:33

Microsoft Fabric aims to consolidate all of that and make it possible to access all of your data in one spot, and that helps streamline things considerably.

11:47

Now faster time to value once again what are we trying to do with our data?

11:52

We’re trying to extract insights right?

11:54

And with the traditional process, where again you have all these separate software tools and specialized skill needs, it can take a long time to get insights out of your data.

12:04

Microsoft Fabric once again aims to get to your insights faster.

12:10

It empowers so many users to do all of these different moving parts much quicker.

12:16

So getting the insights out of your data is much faster, and it’s super exciting. And finally, I’m going to keep using the word excited, because Copilot is something else that enables you to get insights out of your data quickly.

12:31

It’s an AI integration that can be utilized in several different places, and one of the ones I enjoy the most is analyzing your semantic models.

12:42

When you create these models, you’re able to just communicate with Copilot and say, hey, what is something interesting to pull out of my semantic model? And eventually we can start getting more sophisticated.

12:55

We can say, hey, I want to know very specifically which products were doing the best over time, etc.

13:02

And it gives you a starting point, and it accelerates that brainstorming process of working out what you’re really trying to get out of your data.

13:12

So moving on from this, what are some common questions that we’ve seen when it comes to Microsoft Fabric, right?

13:20

And at the end of the deck, I will close the loop to talk about these different points, but just for right now we’re going to pose them as questions.

13:29

Am I limited to Azure services?

13:33

Am I restricted to the box that is Azure? And then, going in tandem with that:

13:39

Can I access my data regardless of where it is?

13:43

If I have my data in several different databases and even furthermore different cloud providers, can I get that data into Microsoft Fabric?

13:54

And then furthermore the next two points, how does Microsoft Fabric address governance and security?

14:02

You know, because again, in the traditional architecture that can be located and dealt with in several different places.

14:08

So how does Microsoft actually address that?

14:13

Moving on to a little bit more stage setting before we get into our demo: Microsoft Fabric is broken down into a few different parts, and one of them is experiences.

14:26

So those big icons at the top in our compute section, those are considered experiences.

14:34

So those of us that are familiar with Power BI service, when you’re in Power BI service and you see the traditional screen and browser experience we’re familiar with, that’s called the Power BI experience.

14:53

And now what Microsoft Fabric has enabled is us to switch between these different user roles, right?

15:01

If I’m an engineer and I want to create a pipeline, well, I change my browser experience to Data Factory and now I can deal with several different tools that I’m familiar with as an engineer and not to go through all of them.

15:16

All of these different options are accessible right in the browser, right in Microsoft Fabric to be able to tailor to whatever your particular role is.

15:26

So beneath the experience level, all of these tiny bullet points, these are called capabilities.

15:34

So just to get our verbiage right, in the Power BI experience, if I create a semantic model or report, those are called capabilities and that’s the same as it goes under each of the experiences.

15:48

Now quickly moving down to the storage slide, we have different options for storage, right.

15:55

We have KQL databases, warehouses, lake houses, things that we’re pretty familiar with already.

16:00

And then there’s OneLake, right?

16:04

And I feel like OneLake can sometimes be viewed as this vaguely intimidating term, like, well, what is OneLake?

16:13

And it doesn’t need to be right?

16:15

So the way that you can look at it is: OneDrive, something we’re all familiar with, is to files as OneLake is to your data.

16:25

It’s just more comprehensive.

16:27

It’s your entire ecosystem.

16:28

Any data that you have can be stored within OneLake and accessed that way.

16:33

So don’t let this term scare you, right?

16:36

OneDrive is to files as OneLake is to your data.

16:40

Moving on to a particular architecture inside of Fabric that we would recommend.

16:48

I mean, given a business use case, right, this is one example of an architecture that can be set up in Fabric. From left to right we’re going to break it down, and then we’re finally going to get into the demo, right?

17:01

So over on the left, we see some examples of source data possibilities, right?

17:08

And we’re breaking everything down in layers.

17:11

So if we move to the next step, one option is to set up a pipeline to ingest data, get it into the ecosystem, and start getting ready to work with it.

17:23

So that’s what we’re considering this bronze layer.

17:26

And then moving forward from there, we have more options to be able to utilize pipelines and be able to pull that in and now transform it into usable data.

17:39

It’s no longer in its raw format.

17:41

We’re able to store it maybe in Parquet Delta format, which is incredibly efficient, right?

17:47

Moving on from there, once again, we can utilize different capabilities, and we can create shortcuts and pipelines to be able to get to our star schema part, right?

17:59

And before we move on, I want to quickly touch on shortcuts, and we’re going to actually see shortcut functionality in the demo.

18:07

What a shortcut is, if you have data that exists anywhere, instead of ingesting data and duplicating it, you can just shortcut some sources.

18:22

So if you have data that already exists, instead of duplicating it and possibly causing changes that aren’t consistent, shortcuts allow you to just reference that existing data, avoiding duplication and working with the source. And then we get to our star schema.

18:43

Once again, we can utilize pipelines along with other capabilities to develop a star schema.

18:49

And again, all of this is stored in Parquet Delta format, which is incredibly efficient.

18:55

Moving on from there, once we develop our star schema, we can go ahead and finally create our semantic model, right?

19:02

And we can do all of this in the browser.

19:05

I’m going to say that a few times throughout the presentation creating my model, it can be done right in the browser.

19:11

We don’t even have to open up Power BI Desktop.

19:14

We don’t have to do any of that.

19:15

It can all be done right in Microsoft Fabric.

19:19

So one more step forward, we have our reporting layer right and we have several capabilities that are inside the Power BI experience, right.

19:29

But once we create our semantic model, we can actually start working with the data and pulling out our insights.

19:36

So having gone over this architecture, let’s go ahead and break it down piece by piece, because at face value, this can look possibly a little intimidating and there’s a lot of moving parts, right?

19:50

So let’s go through another example and really break it down piece by piece.

19:56

So starting at the ingestion level, right?

20:01

As I was stating earlier, we’re in the Power BI experience right now.

20:06

And before we move on, there’s a few stage setting things I want to get out of the way.

20:12

There are a few capabilities that have already been created for this demo, namely a workspace that has Microsoft Fabric enabled.

20:21

We’ve created some pipelines, we’ve created some notebooks, and I’ve created the lake house.

20:26

So just to set the stage, those are some capabilities that have already been created for today’s demo.

20:34

So as I was saying, we’re inside of the Power BI experience and what we’re going to do is jump over to the workspace and one of the first things I want to do is jump into the lake house and I want to observe here that there’s no tables currently, right?

20:50

I want to observe that because that’s going to change.

20:52

And so before we move on, because once again, we’re in the ingestion process, right?

20:58

So there’s a few options I want to display when it comes to ingestion, and the first thing is a shortcut, so I mentioned that earlier where if there’s data that exists somewhere, you can just shortcut it, as opposed to possible duplication efforts, which we want to try to avoid, right?

21:14

We want one source of truth, so creating a shortcut is super straightforward as we can see there.

21:20

And then as an example, what we’re looking at right now is a shortcut to an Amazon Web Services S3 bucket, right?

21:29

So I have data that exists well outside of my Azure domain and I’m able to just reference those files directly, so I’m not duplicating them.

21:41

I’m not pulling it in and it’s just directly referencing those files, which is just awesome.
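To make the copy-versus-shortcut distinction concrete, here is a minimal sketch that uses a file system symbolic link purely as an analogy. It is not how OneLake shortcuts are implemented or created, and it assumes an operating system that permits symlinks. The function name `copy_vs_shortcut` and the file names are hypothetical.

```python
import os
import shutil
import tempfile
from pathlib import Path


def copy_vs_shortcut(workdir: Path) -> tuple[str, str]:
    """Return (contents seen via a copy, contents seen via a link) after the source changes."""
    # Hypothetical "external" source, standing in for data that lives outside the lake house.
    source = workdir / "external" / "orders.csv"
    source.parent.mkdir(parents=True)
    source.write_text("id\n1\n", encoding="utf-8")

    # Hypothetical lake house Files area.
    lake_files = workdir / "lakehouse" / "Files"
    lake_files.mkdir(parents=True)

    copied = lake_files / "orders_copy.csv"
    linked = lake_files / "orders_shortcut.csv"
    shutil.copy(source, copied)   # ingest by duplicating the data
    os.symlink(source, linked)    # reference the data in place (the analogy to a shortcut)

    # The source system appends a new row after both were set up.
    source.write_text("id\n1\n2\n", encoding="utf-8")

    return copied.read_text(encoding="utf-8"), linked.read_text(encoding="utf-8")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        via_copy, via_link = copy_vs_shortcut(Path(tmp))
        print("copy sees:", via_copy.split())
        print("link sees:", via_link.split())
```

The copy keeps the old contents until it is re-ingested, while the link always reads the current source, which is the "one source of truth" point made above.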

21:49

Moving on from there, we have one more section that has files within it.

21:57

Scoot forward just a little bit.

22:01

Nope, that’s too far.

22:04

Why are we jumping ahead like that?

22:06

Don’t do that to me.

22:11

Apologies for that.

22:12

I don’t know why that was jumping ahead.

22:17

I don’t know because my cursor’s been funny.

22:19

But that’s why of course it is.

22:24

Nope, that’s not where I want to be.

22:30

How goofy that my cursor is doing that.

22:36

Apologies for the slight technical difficulty here.

22:40

There we go.

22:41

All right.

22:42

So once again, we already went over the S3 bucket.

22:45

Now let’s check out really quick another place where there’s data that exists and we haven’t gone over how that’s ingested yet, but we’re going to very shortly.

22:56

So moving beyond this, let’s go ahead and head back over to our workspace.

23:03

And the way that this, the sales data is ingested is conveniently named ingest sales.

23:09

It’s a pipeline, right, a data pipeline.

23:12

And we’re going to go ahead and jump into there and look at the different moving parts.

23:17

And within a pipeline, what we’re going to see here is three activities, which is what they’re called.

23:26

Once that loads up, there we go.

23:28

And what this pipeline is doing right now is the first activity is really clearing out any files that exist.

23:36

It gives us a blank slate, and then the Copy data activity is what I really want to talk about.

23:49

Let’s go ahead and jump over to Source, which is what I want to look at. Beautiful.

23:55

So what this Copy data activity is doing is directly referencing a link, GitHub in particular today, and it’s pulling over and copying a CSV into our lake house. That’s all it’s doing. And speaking of our lake house...

24:14

So that’s the source example right there.

24:16

And then we’re going to look at the destination, right, and the destination parameters, the destination selections, there we go.

24:27

So fair enough, we can see where am I going to land this data.

24:31

Well, I’m going to put it in the lake house and the lake house I’m going to put it in is lunch and learn, right.

24:36

And then something we may have seen in the lake house, we saw the files and then we saw a folder called new data and we saw a file called sales.csv.

24:46

So sure enough, when you run this pipeline, and we’ll be able to see the results of that in a moment, it takes that data over the HTTP protocol, creates a folder, pulls in that CSV and puts it in that folder.

25:07

So let’s go ahead and see what those results look like once we run it.

25:12

Oh, before we move on, kind of a little bit of foreshadowing, right.

25:15

When we get past the ingestion stage, we see a notebook here, right?

25:20

This is the last activity in this pipeline.

25:22

And what that’s doing is transforming the raw CSV, right.

25:28

But I don’t want to get ahead of ourselves.

25:30

So I’m going to go ahead and finish the ingestion process, right.

25:33

So we run the pipeline and sure enough, we see there’s three different succeeds here.

25:38

Wonderful.

25:39

Each of those activities has succeeded.
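The three-activity flow described above (clear old files, copy the CSV in, then run a notebook) can be sketched in plain Python. This is only an illustration of the ordering and the per-activity status, not Fabric's pipeline engine or API. The function names, the inline `SOURCE_CSV` sample (standing in for the file fetched over HTTP) and the column names are all hypothetical; the `new_data` folder and `sales.csv` file name follow the demo.

```python
import csv
import shutil
import tempfile
from pathlib import Path

# Hypothetical stand-in for the remote CSV that the Copy data activity fetches over HTTP.
SOURCE_CSV = "order_id,customer,product,amount\n1,Ada,Widget,10.5\n2,Bo,Gadget,4.0\n"


def delete_old_files(files_root: Path) -> None:
    """Activity 1: clear the landing folder so each run starts from a blank slate."""
    shutil.rmtree(files_root / "new_data", ignore_errors=True)


def copy_data(files_root: Path) -> None:
    """Activity 2: create the folder and land the source CSV in it as sales.csv."""
    target = files_root / "new_data"
    target.mkdir(parents=True, exist_ok=True)
    (target / "sales.csv").write_text(SOURCE_CSV, encoding="utf-8")


def run_notebook(files_root: Path) -> None:
    """Activity 3: placeholder for the notebook; here it only checks that the CSV parses."""
    with open(files_root / "new_data" / "sales.csv", newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))
    if not rows:
        raise ValueError("no rows were ingested")


def run_pipeline(files_root: Path) -> list[tuple[str, str]]:
    """Run the activities in order and stop at the first failure."""
    statuses = []
    for activity in (delete_old_files, copy_data, run_notebook):
        try:
            activity(files_root)
            statuses.append((activity.__name__, "Succeeded"))
        except Exception:
            statuses.append((activity.__name__, "Failed"))
            break
    return statuses


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for name, status in run_pipeline(Path(tmp) / "Files"):
            print(f"{name}: {status}")
```

Because the first activity always clears the folder, rerunning the sketch leaves exactly one `sales.csv` behind rather than accumulating files.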

25:42

And now that’s happened, we can go ahead and hop over back to our lake house, which will happen in just a moment.

25:57

Come on.

25:58

There we go, moving back over to our lake house, and sure enough, this is something I called out earlier, right?

26:05

There were no tables in our lake house and now there are and that’s actually what that notebook was doing, right.

26:12

So once again, what we looked at right now was just the ingestion, right, What we see at the top of the screen, just the ingestion.

26:20

And then to quickly review again, we saw that S3 bucket and then we saw this folder that was pulled in via the pipeline and the S3 bucket was the, the shortcut example, right.

26:31

So once again, bronze layer, step one, ingest the data.

26:34

So let’s go ahead and move forward and talk about the transformation of the data now, right.

26:40

So once again, we can see we have that new sales table.

26:45

And if we go ahead and talk about how it was actually transformed and the way that was done was with a notebook, go ahead and jump over there.

26:56

Eventually up top here we see open notebook.

27:00

And as I was saying earlier, there were a few capabilities that were already created, some notebooks being among them. So we pick an existing notebook, and conveniently we have one named load sales.

27:19

Go ahead and open that up and check that out.

27:23

So what we’re going to see here is some PySpark. Great. And this is an opportunity to mention really quick that, for those who are familiar and comfortable working with PySpark or Spark SQL, this is a place to use that, right?

27:42

If you’re comfortable working in notebooks in this environment, it’s an option for you to start doing transformations and working with your data.

27:50

Now, we’re not going to go through all of this code, but in particular I want to point out two points.

27:56

What’s highlighted right now is taking the data in our lake house in that directory, right? The files, the new data folder, and then the wildcard CSV, which is grabbing everything that’s in there.

28:10

And then the rest of the code is doing some transformations.

28:13

The other thing I really want to point out is where it’s writing the data to the lake house, which will be just a little bit forward.

28:23

There we go.

28:24

So now we can see this part of the code is writing the table to the lake house and that’s how this new sales table was created.

28:35

And again to kind of close that loop from the pipeline, this is the notebook that’s being triggered, right.

28:41

So that pipeline ingests the data into the files in our lake house, and then the notebook is triggered and loads the sales data into new sales as a table.
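The demo notebook itself is PySpark and is not reproduced in this transcript. As a rough stand-in that runs anywhere, the sketch below shows the same shape (wildcard read of every CSV in a folder, a small transformation, then a table write) using only the Python standard library, with SQLite in place of the lake house. The column names, the trimming and casting step, and the overwrite-on-each-run behavior are assumptions; only the `new_sales` table name comes from the demo.

```python
import csv
import glob
import sqlite3
import tempfile
from pathlib import Path


def load_sales(files_dir: Path, conn: sqlite3.Connection) -> int:
    """Read every CSV in files_dir, clean the rows, and rewrite the new_sales table."""
    rows = []
    for path in sorted(glob.glob(str(files_dir / "*.csv"))):  # the wildcard read
        with open(path, newline="", encoding="utf-8") as f:
            for rec in csv.DictReader(f):
                # Assumed transformation: trim text fields and cast numeric ones.
                rows.append((
                    int(rec["order_id"]),
                    rec["customer"].strip(),
                    rec["product"].strip(),
                    float(rec["amount"]),
                ))
    # Assumed overwrite semantics: drop and recreate the table on every run.
    conn.execute("DROP TABLE IF EXISTS new_sales")
    conn.execute(
        "CREATE TABLE new_sales (order_id INTEGER, customer TEXT, product TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO new_sales VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        (folder / "sales.csv").write_text(
            "order_id,customer,product,amount\n1, Ada ,Widget,10.5\n", encoding="utf-8"
        )
        connection = sqlite3.connect(":memory:")
        print("rows loaded:", load_sales(folder, connection))
```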

28:57

Now, as another example of utilizing a notebook, I want to show Transform S3 Data, which is what I have called it today.

29:08

And if we look at this one, I wanted to take an opportunity to talk about how we’re not only working with files that can be loaded through pipelines, but this,

29:19

if we recall, is the shortcut file, right?

29:24

So I’ve created a shortcut to an S3 bucket, I see those files, I’m directly referencing them, I’m not copying the data and I’m able to work with those in a notebook.

29:37

And we can see here this is the file directory to the S3 shortcut.

29:43

And then down below, we’re going to go ahead and write that as a table so that we can work with it in our lake house.

29:53

We can see down below, after I run the notebook, there’s several succeed statements, which is wonderful.

29:58

And then if we refresh our tables, sure enough, we see our... oh, did I scoot backwards?

30:07

I totally did.

30:08

I don’t want to scoot backwards.

30:09

I want to see that table creation.

30:16

Did I go back a slide again?

30:19

I don’t think so.

30:24

I did.

30:24

I went earlier in the video, almost there.

30:30

There we go.

30:31

So I refreshed the tables in the lake house after running the notebook, and sure enough, it took that file that’s being referenced in the shortcut from the S3 bucket, transformed it, loaded it as a table, and now I can start working with that file.

30:48

All right.

30:48

So once again, what have we gone through so far?

30:51

We’ve gone through ingestion and now we’ve been through some examples of transforming your data.

30:57

So what’s next?

30:58

Now we’re going to talk about creating, not our semantic model, that’s afterwards, but our star schema.

31:05

So how did we approach that today?

31:08

We utilized views, and we can see that up in the top right here.

31:12

I’m going to head over to the SQL analytics endpoint and touch on that really quick.

31:18

What is that?

31:19

The SQL analytics endpoint is something that’s created by default when we create a lake house.

31:30

Going back a slide because I skipped forward again having such issues with skipping through these.

31:37

All right, boom.

31:40

So we are creating a star schema and we’re in the SQL analytics endpoint, which is created by default when you create a lake house.

31:49

And what we can see on screen right now is a very familiar experience for anyone who has worked with data in certain applications and utilized SQL.

31:58

So we’re able to query the data that we have in our lake house.

32:03

And for those of us who are familiar with SQL, we’re able to do that.

32:06

And again, what we’ve done today to create our star schema is utilize views. In particular, I’ve created three different tables here, and just to get a peek into one of those views, the customer table, we can see the SQL behind the scenes, right? It’s coming from the new sales table, which is kind of our starting point table.

32:28

And then we parse it apart into different tables to create our star schema.

32:33

So that’s how we handled creating our star schema.
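A minimal sketch of that view-based approach follows, using SQLite from the Python standard library rather than the SQL analytics endpoint (whose T-SQL dialect differs). The view names `dim_customer`, `dim_product` and `fact_sales`, the column names and the sample rows are all hypothetical; the demo only tells us that three views were created from `new_sales` and that one of them is a customer table. Natural keys are used here for brevity where a production star schema would typically use surrogate keys.

```python
import sqlite3


def build_star_schema(conn: sqlite3.Connection) -> None:
    """Create one fact view and two dimension views over the flat new_sales table."""
    conn.executescript(
        """
        CREATE VIEW dim_customer AS
            SELECT DISTINCT customer AS customer_name FROM new_sales;
        CREATE VIEW dim_product AS
            SELECT DISTINCT product AS product_name FROM new_sales;
        CREATE VIEW fact_sales AS
            SELECT order_id,
                   customer AS customer_name,
                   product  AS product_name,
                   amount
            FROM new_sales;
        """
    )


def sales_by_product(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Example query a report might issue: total amount per product."""
    return conn.execute(
        """
        SELECT p.product_name, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_name = f.product_name
        GROUP BY p.product_name
        ORDER BY p.product_name
        """
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    connection.execute(
        "CREATE TABLE new_sales (order_id INTEGER, customer TEXT, product TEXT, amount REAL)"
    )
    connection.executemany(
        "INSERT INTO new_sales VALUES (?, ?, ?, ?)",
        [(1, "Ada", "Widget", 10.5), (2, "Bo", "Gadget", 4.0), (3, "Ada", "Gadget", 4.0)],
    )
    build_star_schema(connection)
    print(sales_by_product(connection))
```

Because these are views rather than copied tables, they always reflect whatever is currently in `new_sales`, so rerunning the load does not require rebuilding the schema.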

32:37

And then the next part is creating our semantic model, which is exciting.

32:43

Now, conveniently enough, we’re inside of our lake house, no longer in our SQL analytics endpoint.

32:51

Back in the lake house we conveniently have a button, New semantic model, right?

32:56

And then this will open up a few wizards to be able to name our model and then, importantly, select the tables we want to work with, right?

33:06

So I hit confirm and then sure enough, it brings us to an interface that is really familiar for us that utilize Power BI Desktop and right in the browser.

33:18

Again, I said I’ll repeat that a couple times: everything we have done is in the browser.

33:23

I’m able to create my semantic model, all of my relationships and I’m even able to make measures in here.

33:32

And this is all within Microsoft Fabric.

33:37

So again, what we’re seeing on screen right now creating our semantic model and sure enough, this can now be utilized for reporting purposes.

33:46

And then let’s finally talk about that step, right.

33:50

We have our semantic model, new report is conveniently located in that interface.

33:56

And here we are to an experience that many of us are familiar with.

34:00

If we utilize Power BI Desktop, this is just like that application.

34:05

You’re able to build your reports, you’re able to utilize several different things that you’re already familiar with and a little bit of a sneak peek into Copilot, right, because that’s one of the things we’re super excited about.

34:17

So I’m opening up Copilot here over on the right.

34:21

We can see something pops up for Copilot, and I’m just going to say, hey, suggest some content for my semantic model, right, for my report.

34:28

It’s going to do its magic and provide a few suggestions on what might be interesting for our data. And I skipped back again instead of hitting pause.

34:48

So after it creates that, I’m going to say, OK, sales performance by product, let’s go ahead and check that out.

34:53

And it just creates that report for us from scratch.

34:57

And it’s fast.

34:58

So is this exactly what we want to look at if we’re building our reports?

35:02

And maybe not.

35:03

But for brainstorming purposes, it gives us a start.

35:06

It gives us a layout. It gets our brain churning so we can see what type of insights we want to pull out of our data.

35:15

So before we move on and then get towards our wrap up, let’s talk about everything we’ve been through.

35:21

We talked about ingestion of the data.

35:23

We’ve transformed it with a few different notebooks.

35:27

From that point we utilized views to create our star schema, and then finally we created the semantic model right in the browser and our reporting right in the browser, and then also got a little sneak peek into Copilot. Beautiful.
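
As a rough illustration of the "views to create our star schema" step in that recap, here is a minimal sketch using Python's built-in `sqlite3` module rather than a Fabric warehouse. The table, view, and column names (`staged_sales`, `dim_product`, `fact_sales`) are hypothetical and are not taken from the demo; the point is only to show a flat staged table being exposed as a dimension view and a fact view.

```python
import sqlite3


def build_star_schema(conn):
    """Create a flat staging table, then star-schema views on top of it."""
    conn.executescript("""
        -- Hypothetical flat table, as it might look after ingestion and cleanup.
        CREATE TABLE staged_sales (
            order_id INTEGER, product_name TEXT, category TEXT,
            quantity INTEGER, unit_price REAL
        );
        INSERT INTO staged_sales VALUES
            (1, 'Widget', 'Hardware', 2, 10.0),
            (2, 'Gadget', 'Hardware', 1, 25.0),
            (3, 'Widget', 'Hardware', 5, 10.0);

        -- Dimension view: one row per distinct product.
        CREATE VIEW dim_product AS
            SELECT DISTINCT product_name, category FROM staged_sales;

        -- Fact view: one row per order, with a computed sales amount.
        CREATE VIEW fact_sales AS
            SELECT order_id, product_name, quantity,
                   quantity * unit_price AS sales_amount
            FROM staged_sales;
    """)


def sales_by_product(conn):
    """Join the fact view to the dimension view and total sales per product."""
    return conn.execute("""
        SELECT d.product_name, SUM(f.sales_amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_name = f.product_name
        GROUP BY d.product_name
        ORDER BY d.product_name
    """).fetchall()


conn = sqlite3.connect(":memory:")
build_star_schema(conn)
print(sales_by_product(conn))
```

Because the dimension and fact objects are views, no data is copied; a semantic model built on top of them would simply see the star-schema shape.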

35:42

So that concludes the demo and earlier we had questions, right.

35:47

Are we restricted to Azure, etc.? This is going to be me closing the loop and really talking about whether it is a re-architecting of the data analytics space or just marketing hype. First off: am I restricted to Azure?

36:04

And the answer is yes, you’re restricted in the sense that everything runs on Azure, but you’re able to access your data that is outside of Azure.

36:16

You can mirror different platforms such as Amazon Web Services, Snowflake and MongoDB, and we saw an example of a shortcut, right?

36:25

So I’m absolutely able to reach out and grab that data, and we at Senturus really believe that is a re-architecting of the landscape.

36:35

It’s not just hype.

36:37

You really can re-architect what we’re traditionally used to. Now, as for governance and security, we’re going to go ahead and stick with the stance that at this time it’s marketecture, right? Because we have to think about all of this capability and this empowerment of users, even the average user who isn’t necessarily an expert in all of these different fields.

37:08

That demo I was able to do all myself.

37:12

I didn’t have to rely on any other teams, any other specialists.

37:15

I was able to do that entire experience end to end.

37:19

So that really raises the question of how governance and security are handled. Having one user empowered to do this entire process, well, it might not be the setup that you want, right?

37:34

Having said that, governance and security is a little bit out of scope for this webinar, but it’s something we can certainly touch on in the future, hence the “stay tuned,” right?

37:47

So again that concludes our demo.

37:49

That concludes our final take on those questions we brought up earlier in the slide.

37:54

And Steve, I’m going to go ahead and throw it over to you now.

38:00

All right.

38:01

Thank you, Joey.

38:01

Just a quick summary here before we lead into our wrap up and a little bit of Q&A.

38:08

Just in summation: is Fabric marketecture or architecture?

38:12

Joey just addressed some of that.

38:14

Ultimately, our conclusion is Fabric is an architectural leap forward.

38:18

Certainly there’s more to come, and Microsoft has a lot on the roadmap for Fabric.

38:23

But we do believe that Fabric is a great architectural step forward.

38:27

Of course, it does have a lot of moving parts and Joey addressed some of that.

38:32

But the reality is Fabric can accelerate your time to value for a lot of use cases. Some of the key use cases that Fabric can be useful for include healthcare, M&A, risk management, really any kind of rapidly scaling business activity.

38:46

These can really benefit from the ability to quickly roll things out in Fabric, where in the past you used to have a lot more moving parts and separate parts.

38:57

So if you’re ready to check out Fabric, reach out to us.

39:00

We can help you kick-start a Fabric POC and explore the possibilities of Fabric in your environment, and we can do that setup either within your existing environment or in a sandbox.

39:11

So don’t hesitate to reach out if you’re interested in exploring Fabric further and you can get some time on Kay Knowles’s calendar.

39:21

Kay, I haven’t been tracking closely, but you’ve probably posted your link in the webinar chat.

39:26

If not, you can always reach us at senturus.com.

39:30

Just reach out to us on the website and contact us if you’re interested in exploring a Fabric POC. Now, a little bit of general detail about Senturus.

39:41

If you don’t already know, we’ve got tons of resources on our website.

39:44

Go to senturus.com/resources.

39:46

You’ll find tech tips and past webinars, both recordings and slide decks.

39:52

We’ve got a great matrix on the Microsoft stack and what features are available where, so check all of that out at senturus.com/resources.

40:04

Couple of upcoming events we want you to be aware of.

40:06

We’ve got a webinar in January

40:08

on using live data from Power BI

40:10

within Microsoft 365 apps. A lot of people haven’t seen how cool and easy it is to actually embed Power BI data, or now Fabric data,

40:19

I should say, visualizations directly in Microsoft apps, so check that out January 25th.

40:26

We are also going to do an in-person workshop on Fabric, so if you are interested in that, go to senturus.com/events and let us know.

40:34

The date and location for that workshop are still to be announced. And from there: we are Senturus. Modern BI, accelerated and accessible.

40:45

We’ve been in this business for a long time and we especially shine in hybrid environments.

40:51

Many of you may know that we started out in the Cognos world and over the years have expanded into Tableau, Power BI,

40:58

and now Fabric. We provide a full spectrum of analytic services, including ad hoc services and advisory services to help you on your BI journey.

41:09

So reach out to us.

41:10

We’ve got the flexibility and the knowledge to help you get the job done right.

41:15

We’ve been in business 22 years now, over 1400 clients, over 3000 projects.

41:22

Some of you may recognize some of the logos on this screen.

41:26

They might be the companies that you work for.

41:29

Anyway, we’ve been in the business for a long time.

41:31

We are small enough to provide personal service, but also big enough to cover all of your BI needs.

41:38

And with that, we’re going to launch into a little bit of Q&A here.

41:42

Just a few questions have come through during the webinar, so I’m going to have, well, Joey is probably already unmuted, so I’m just going to go through here.

41:50

One of the first questions that came in was whether Fabric is actually generally available now.

41:56

And so, just straight up, the answer to that is yes: Microsoft announced Fabric moving into GA in mid-November.

42:03

So Fabric is live and you can launch right into it.

42:09

We had a question come in about shortcuts, and Joey, I know in your demo you showed an example of using an AWS shortcut.

42:19

I know shortcuts are also available to Azure Data Lake Storage.

42:23

Are shortcuts available to other locations, or is that the focus of shortcuts today?

42:31

I should say other platforms, not locations.

42:34

So if I understand, the question is: can we shortcut to places other than S3?

42:43

What do you think, Steve?

42:44

Am I understanding correctly?

42:45

Yeah, so do now.

42:48

Again, I’m a little out of my element here, but you showed the example of doing a shortcut to AWS S3 buckets, and I know you can have shortcuts to Azure Data Lake Storage.

42:58

Are there other types of storage or other like kind of third?

43:02

I think the intent of the question was: are there other third-party data storage providers that you can create shortcuts to from Fabric?

43:13

So you’re able to pull data into OneLake, right, which is important.

43:18

And if you’re able to utilize, say, a pipeline to pull data from another cloud provider, as an example, you’re able to pull that into OneLake, and then from that point you could shortcut the data, right?

43:31

So you can still have that centralization of the data when you put it in a specific spot.

43:36

And again, I mean one of the biggest functionalities is avoiding that duplication, right?

43:41

So there are ways to pull in other third-party sources and still utilize shortcuts.

43:47

And if anybody else on the panel would like to add to that, by all means, yeah.

43:54

And this is Guy.

43:56

In addition to ADLS and S3, you can also access Dataverse as well through shortcuts.

44:06

And then the latest feature that was announced at Ignite, and is in the process of being introduced, I believe this month, is called mirroring, where you can actually have Fabric do real-time replication of your data from other databases such as Snowflake and MongoDB.

44:30

And there will be several other relational databases from which you can selectively pull those tables that you’re interested in having housed in Fabric and have them replicate on a real-time basis.

44:49

Hey Guy, quick question about mirroring vs shortcuts.

44:54

So do we know yet in terms of performance whether there’s a benefit?

45:00

The way you just described mirroring, it sounds like Fabric actually is keeping a copy of the data locally.

45:05

So I would think that would have better performance than shortcuts, which I assume are doing a kind of remote query to the data source.

45:13

But is that an accurate description of how those technologies work?

45:17

That is an accurate description of how the technologies work.

45:21

In terms of actual benchmarking, we haven’t seen major issues performance wise with shortcuts, but I do expect, as you said, Steve, that mirroring will be even faster than that.
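
To make the distinction in this exchange concrete, here is a toy Python model of the two access patterns as the speakers describe them: a shortcut that reads the remote source on every query, and a mirror that serves queries from a local replica. This is a conceptual sketch only. The class names are hypothetical, it does not use or represent any Fabric API, and it says nothing about how Fabric actually implements either feature.

```python
class RemoteSource:
    """Stand-in for an external data store; counts how often it is read."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.reads = 0

    def read(self):
        self.reads += 1
        return list(self.rows)


class Shortcut:
    """Holds only a reference, so every query goes to the remote source."""

    def __init__(self, source):
        self.source = source

    def query(self):
        return self.source.read()


class Mirror:
    """Keeps a local replica that is refreshed whenever sync() runs."""

    def __init__(self, source):
        self.source = source
        self.replica = []
        self.sync()  # initial replication costs one remote read

    def sync(self):
        self.replica = self.source.read()

    def query(self):
        return list(self.replica)  # served locally, no remote read


source = RemoteSource([("A", 1), ("B", 2)])
shortcut = Shortcut(source)
mirror = Mirror(source)

for _ in range(3):
    shortcut.query()  # each call is a remote read
    mirror.query()    # each call is answered from the replica

print("remote reads:", source.reads)
```

In this model the trade-off is visible in both directions: the mirror avoids repeated remote reads, but it only reflects new source rows after the next `sync()`, whereas the shortcut always sees the current source data.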

45:36

Cool.

45:37

Thanks, Guy.

45:38

And that’s specific to the Parquet/Delta lakehouse connection.

45:43

So that’s what they’re boasting as the reason behind how they’re able to do that at such a high rate.

45:58

Does that make sense?

46:01

Yeah.

46:01

So I actually have a general question, probably mostly for Joey and Steve, just about the way that Microsoft has pulled everything together in the Fabric portal.

46:15

For people who have past experience with, say, Azure Data Factory or Synapse Analytics or, you know, all of the components that have been incorporated into Fabric,

46:26

how seamless is the transition?

46:29

I mean, is the interface basically identical, or has it been reworked to fit into this kind of unified workspace? How easy is it for somebody who has past experience to dive into Microsoft Fabric? Right, yeah, that’s a great question.

46:46

So I would expect the transition to be rather comfortable, and I expect it to be really easy to make the transition to the experiences that have been brought under the one roof that is Microsoft Fabric.

47:01

A lot of them are going to look incredibly similar to what we’re used to, and that’s what we believe is Microsoft’s goal, right: to take the familiar experiences and just put them under one software-as-a-service offering.

47:17

So I expect the transition to be comfortable and pretty straightforward. Great.

47:25

And then, since we had a lot of people in the poll in that “what is Fabric” camp, or kind of watching and waiting:

47:35

If I’m totally new to the world of Power BI and now Microsoft Fabric, what would you say is the learning curve?

47:45

Is it pretty quick to get started, or am I going to need to spend some time and do a little bit of training? From click-to-sign-up to go, how quick is it to actually get value out of Microsoft Fabric as a totally new user?

48:06

So, you were saying even new to Power BI, is that right?

48:11

Yeah, great.

48:12

So I think Microsoft has really designed Fabric for seamless and intuitive adoption, right?

48:20

So if you’re familiar with Microsoft products, I think Microsoft Fabric is going to feel very natural, and one of the great things that Microsoft Fabric has done is what I mentioned a little earlier in the slides: that low-code to no-code experience, right?

48:37

So Microsoft actually has some phenomenal training resources on their own website. And as for going from ingesting to reporting on the data, because of so many low-code to no-code options and capabilities, I think it would be pretty quick to catch on.

48:56

It’s pretty intuitive, especially if you’re already familiar with some basic Microsoft products.

49:02

All right, well thanks Joey.

49:05

So we’ve got a couple of questions coming in here about pulling existing (I don’t know if I’m using the right terminology here) objects into Fabric.

49:16

So for example, if an organization already has a bunch of Azure Data Factory pipelines, do those just like import directly into Fabric or are they just visible directly in Fabric because they already exist?

49:29

Like what is the process for bringing existing content over to the Fabric world?

49:35

That’s an interesting question.

49:36

So if I understand correctly, say people are going outside of Fabric to where they have Data Factory, right?

49:44

And are those easily portable over to Microsoft Fabric?

49:50

So there are some situations where you are able to pull out some type of template and quickly transfer it over to Fabric.

50:01

But at this point in time I would say that functionality may be a little limited.

50:09

So I certainly don’t think that transfer would be hard, because the functionalities between the two platforms would be really similar.

50:16

But to my knowledge they don’t just plop over. And before we continue, I want to make sure that Guy and Steve also agree with that statement.

50:31

So they are releasing migration or import functionality so that you can seamlessly import the pipelines from ADF into Fabric. That is a feature that is going to be possible.

50:48

So you don’t have to actually completely rebuild your pipelines within Fabric just to get it in there.

50:55

Awesome.

50:55

Yeah, I knew that was available for some of the product.

50:59

So sounds like they’re also working on more, which is awesome.

51:05

Great.

51:05

Thanks, guys.

51:06

All right.

51:06

One last question here.

51:07

This might be a little provocative, but I’m going to ask it because it was asked, which is: isn’t Fabric just making it easier for end users to create and manipulate data on their own?

51:22

And so before you answer that question, the second part of this is or do we propose that these functions should really reside in a central location so that it’s not a complete Wild West out there.

51:33

And I know there are a lot of varying opinions in the world about whether analytics should be centralized or if it should be done by the people who actually need the data at the end of the day.

51:46

So I’m going to let you guys tackle that to the degree that you wish.

51:52

Yeah.

51:52

So I love the term that was used, Wild West, because it’s actually something that we’ve used ourselves. That’s where governance and security comes in, right?

52:02

So we won’t dive too deeply into that, but we touched on how Microsoft Fabric can really empower users to do that end-to-end process, right?

52:13

But that’s where governance and security really has to be developed, talked about and adhered to.

52:19

So if you only want a few individuals or personas to create pipelines, that’s something that you can set up.

52:28

So yes, if you don’t set up proper governance and security, you could have a Wild West situation.

52:34

But as it goes for governance and security you can alleviate that, right.

52:41

And because everything’s under one roof that really does help.

52:46

I mean, that’s our opinion at Senturus: it is helpful to have all of those moving parts in one browser experience, and to avoid that Wild West we spoke of (which, again, I love that term), it really just comes down to proper governance and security, right?

53:08

If I may add one quick thing, when it comes to really governing all of these capabilities and features across your organization, you really want to increase the communication and really the discussions you’re having among everyone because obviously just because the features and capabilities are there and they can be employed by everyone doesn’t necessarily mean they should.

53:35

So obviously it’s going to allow people that don’t usually get into things like maybe business users utilizing data science tools and things like that.

53:47

It can quickly kind of spin out of control if you just let everybody do it.

53:50

So really having open communication between the business users and your traditional IT folks is extremely important.

53:58

And so it’s really about making sure that you’re utilizing security groups and Active Directory and setting out a security schema before you get started, because once you go down that road, it’s much more difficult to reverse course.

54:18

So we highly recommend that you kind of outline that from the very beginning rather than just enabling it across the organization just because you can.

54:29

So thanks Steve.

54:32

That’s really a great point and I think this is quite a good spot for us to wrap up on today’s webinar.

54:40

But thank you everybody.

54:42

The participants and our presenter, Joey, as well as Guy and Steve, who were answering questions for us in the background.

54:51

Thank you everybody for joining us.

54:52

If we didn’t get to your specific question during the session today, we will reach out to you afterwards to follow up.

54:59

So don’t worry about that.

55:01

As I mentioned before, you can download a copy of the deck at Senturus.com/resources.

55:06

You’ll also be able to get a recording of this webinar next week if you’re interested in that.

55:12

So with that, thank you as always for attending today’s webinar.

55:16

We hope to see you again on a future Senturus webinar.

55:19

And everyone have a great day.

55:21

Thanks.